Fast batched inference#

The ASE calculator is not necessarily the most efficient way to run a lot of computations. It is better to do a “mass inference” using a command line utility. We illustrate how to do that here.

In this paper we computed about 10K different gold structures:

Boes, J. R., Groenenboom, M. C., Keith, J. A., & Kitchin, J. R. (2016). Neural network and Reaxff comparison for Au properties. Int. J. Quantum Chem., 116(13), 979–987. http://dx.doi.org/10.1002/qua.25115

You can retrieve the dataset below. In this notebook we learn how to do “mass inference” without an ASE calculator. You do this by creating a config.yml file, and running the main.py command line utility.

from fairchem.core.scripts.download_large_files import download_file_group

download_file_group('docs')

docs/tutorials/NRR/NRR_example_bulks.pkl already exists

docs/core/fine-tuning/supporting-information.json already exists

docs/core/data.db already exists

Inference on this file will be fast if we have a gpu, but if we don’t this could take a while. To keep things fast for the automated builds, we’ll just select the first 10 structures so it’s still approachable with just a CPU. Comment or skip this block to use the whole dataset!

! mv data.db full_data.db

import ase.db

import numpy as np

with ase.db.connect('full_data.db') as full_db:

with ase.db.connect('data.db',append=False) as subset_db:

# Select 50 random points for the subset, ASE DB ids start at 1

for i in np.random.choice(list(range(1,len(full_db)+1)),size=50,replace=False):

atoms = full_db.get_atoms(f'id={i}', add_additional_information=True)

if 'tag' in atoms.info['key_value_pairs']:

atoms.info['key_value_pairs']['tag'] = int(atoms.info['key_value_pairs']['tag'])

for key in atoms.info["key_value_pairs"]:

if atoms.info["key_value_pairs"][key] == "True":

atoms.info["key_value_pairs"][key] = True

elif atoms.info["key_value_pairs"][key] == "False":

atoms.info["key_value_pairs"][key] = False

subset_db.write(atoms, **atoms.info['key_value_pairs'])

! ase db data.db

id|age|user |formula|calculator| energy|natoms| fmax|pbc| volume|charge| mass

1| 1s|runner|Au43 |unknown |-112.320| 43|0.342|TTT|6014.312| 0.000|8469.562

2| 0s|runner|Au39 |unknown |-100.465| 39|0.122|TTT|5893.874| 0.000|7681.696

3| 0s|runner|Au4 |unknown | -11.598| 4|0.112|TTT| 156.940| 0.000| 787.866

4| 0s|runner|Au19 |unknown | -46.176| 19|0.131|TTT|4101.856| 0.000|3742.365

5| 0s|runner|Au39 |unknown | -95.532| 39|0.907|TTT|5893.874| 0.000|7681.696

6| 0s|runner|Au16 |unknown | -37.448| 16|0.122|TTT|3923.762| 0.000|3151.465

7| 0s|runner|Au11 |unknown | -34.898| 11|0.021|TTT| 211.976| 0.000|2166.632

8| 0s|runner|Au12 |unknown | -27.060| 12|0.131|TTT|3571.095| 0.000|2363.599

9| 0s|runner|Au12 |unknown | -28.072| 12|0.083|TTT|5639.752| 0.000|2363.599

10| 0s|runner|Au43 |unknown |-106.807| 43|1.011|TTT|6014.312| 0.000|8469.562

11| 0s|runner|Au26 |unknown | -83.110| 26|0.568|TTT| 476.093| 0.000|5121.131

12| 0s|runner|Au47 |unknown |-121.143| 47|0.771|TTT|6374.410| 0.000|9257.429

13| 0s|runner|Au22 |unknown | -54.400| 22|0.030|TTT|4335.494| 0.000|4333.265

14| 0s|runner|Au19 |unknown | -45.505| 19|0.321|TTT|4101.856| 0.000|3742.365

15| 0s|runner|Au32 |unknown | -82.019| 32|0.381|TTT|5385.186| 0.000|6302.930

16| 0s|runner|Au4 |unknown | -11.727| 4|0.142|TTT| 156.940| 0.000| 787.866

17| 0s|runner|Au29 |unknown | -72.840| 29|0.329|TTT|5184.740| 0.000|5712.031

18| 0s|runner|Au33 |unknown | -85.695| 33|0.218|TTT|5480.808| 0.000|6499.897

19| 0s|runner|Au38 |unknown |-100.149| 38|0.338|TTT|5846.048| 0.000|7484.730

20| 0s|runner|Au15 |unknown | -35.021| 15|0.089|TTT|3809.068| 0.000|2954.499

Rows: 50 (showing first 20)

Keys: NEB, bulk, calc_time, cluster, concentration, config, converged, diffusion, ediff, ... (27 more)

You have to choose a checkpoint to start with. The newer checkpoints may require too much memory for this environment.

from fairchem.core.models.model_registry import available_pretrained_models

print(available_pretrained_models)

('CGCNN-S2EF-OC20-200k', 'CGCNN-S2EF-OC20-2M', 'CGCNN-S2EF-OC20-20M', 'CGCNN-S2EF-OC20-All', 'DimeNet-S2EF-OC20-200k', 'DimeNet-S2EF-OC20-2M', 'SchNet-S2EF-OC20-200k', 'SchNet-S2EF-OC20-2M', 'SchNet-S2EF-OC20-20M', 'SchNet-S2EF-OC20-All', 'DimeNet++-S2EF-OC20-200k', 'DimeNet++-S2EF-OC20-2M', 'DimeNet++-S2EF-OC20-20M', 'DimeNet++-S2EF-OC20-All', 'SpinConv-S2EF-OC20-2M', 'SpinConv-S2EF-OC20-All', 'GemNet-dT-S2EF-OC20-2M', 'GemNet-dT-S2EF-OC20-All', 'PaiNN-S2EF-OC20-All', 'GemNet-OC-S2EF-OC20-2M', 'GemNet-OC-S2EF-OC20-All', 'GemNet-OC-S2EF-OC20-All+MD', 'GemNet-OC-Large-S2EF-OC20-All+MD', 'SCN-S2EF-OC20-2M', 'SCN-t4-b2-S2EF-OC20-2M', 'SCN-S2EF-OC20-All+MD', 'eSCN-L4-M2-Lay12-S2EF-OC20-2M', 'eSCN-L6-M2-Lay12-S2EF-OC20-2M', 'eSCN-L6-M2-Lay12-S2EF-OC20-All+MD', 'eSCN-L6-M3-Lay20-S2EF-OC20-All+MD', 'EquiformerV2-83M-S2EF-OC20-2M', 'EquiformerV2-31M-S2EF-OC20-All+MD', 'EquiformerV2-153M-S2EF-OC20-All+MD', 'SchNet-S2EF-force-only-OC20-All', 'DimeNet++-force-only-OC20-All', 'DimeNet++-Large-S2EF-force-only-OC20-All', 'DimeNet++-S2EF-force-only-OC20-20M+Rattled', 'DimeNet++-S2EF-force-only-OC20-20M+MD', 'CGCNN-IS2RE-OC20-10k', 'CGCNN-IS2RE-OC20-100k', 'CGCNN-IS2RE-OC20-All', 'DimeNet-IS2RE-OC20-10k', 'DimeNet-IS2RE-OC20-100k', 'DimeNet-IS2RE-OC20-all', 'SchNet-IS2RE-OC20-10k', 'SchNet-IS2RE-OC20-100k', 'SchNet-IS2RE-OC20-All', 'DimeNet++-IS2RE-OC20-10k', 'DimeNet++-IS2RE-OC20-100k', 'DimeNet++-IS2RE-OC20-All', 'PaiNN-IS2RE-OC20-All', 'GemNet-dT-S2EFS-OC22', 'GemNet-OC-S2EFS-OC22', 'GemNet-OC-S2EFS-OC20+OC22', 'GemNet-OC-S2EFS-nsn-OC20+OC22', 'GemNet-OC-S2EFS-OC20->OC22', 'EquiformerV2-lE4-lF100-S2EFS-OC22', 'SchNet-S2EF-ODAC', 'DimeNet++-S2EF-ODAC', 'PaiNN-S2EF-ODAC', 'GemNet-OC-S2EF-ODAC', 'eSCN-S2EF-ODAC', 'EquiformerV2-S2EF-ODAC', 'EquiformerV2-Large-S2EF-ODAC', 'Gemnet-OC-IS2RE-ODAC', 'eSCN-IS2RE-ODAC', 'EquiformerV2-IS2RE-ODAC')

from fairchem.core.models.model_registry import model_name_to_local_file

checkpoint_path = model_name_to_local_file('GemNet-dT-S2EFS-OC22', local_cache='/tmp/fairchem_checkpoints/')

checkpoint_path

'/tmp/fairchem_checkpoints/gndt_oc22_all_s2ef.pt'

We have to update our configuration yml file with the test dataset.

from fairchem.core.common.tutorial_utils import generate_yml_config

yml = generate_yml_config(checkpoint_path, 'config.yml',

delete=['cmd', 'logger', 'task', 'model_attributes',

'dataset', 'slurm'],

update={'amp': True,

'gpus': 1,

'task.prediction_dtype': 'float32',

'logger':'tensorboard', # don't use wandb!

# Test data - prediction only so no regression

'dataset.test.src': 'data.db',

'dataset.test.format': 'ase_db',

'dataset.test.a2g_args.r_energy': False,

'dataset.test.a2g_args.r_forces': False,

'dataset.test.select_args.selection': 'natoms>5,xc=PBE',

})

yml

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/scn/spherical_harmonics.py:23: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/equiformer_v2/wigner.py:10: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/escn/so3.py:23: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

/home/runner/work/fairchem/fairchem/src/fairchem/core/common/relaxation/ase_utils.py:191: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

checkpoint = torch.load(checkpoint_path, map_location=torch.device("cpu"))

WARNING:root:Detected old config, converting to new format. Consider updating to avoid potential incompatibilities.

INFO:root:amp: true

cmd:

checkpoint_dir: /home/runner/work/fairchem/fairchem/docs/core/checkpoints/2025-01-21-19-37-36

commit: c162617

identifier: ''

logs_dir: /home/runner/work/fairchem/fairchem/docs/core/logs/wandb/2025-01-21-19-37-36

print_every: 100

results_dir: /home/runner/work/fairchem/fairchem/docs/core/results/2025-01-21-19-37-36

seed: null

timestamp_id: 2025-01-21-19-37-36

version: 0.1.dev1+gc162617

dataset:

format: oc22_lmdb

key_mapping:

force: forces

y: energy

normalize_labels: false

oc20_ref: /checkpoint/janlan/ocp/other_data/final_ref_energies_02_07_2021.pkl

raw_energy_target: true

evaluation_metrics:

metrics:

energy:

- mae

forces:

- forcesx_mae

- forcesy_mae

- forcesz_mae

- mae

- cosine_similarity

- magnitude_error

misc:

- energy_forces_within_threshold

primary_metric: forces_mae

gp_gpus: null

gpus: 0

logger: wandb

loss_functions:

- energy:

coefficient: 1

fn: mae

- forces:

coefficient: 1

fn: l2mae

model:

activation: silu

cbf:

name: spherical_harmonics

cutoff: 6.0

direct_forces: true

emb_size_atom: 512

emb_size_bil_trip: 64

emb_size_cbf: 16

emb_size_edge: 512

emb_size_rbf: 16

emb_size_trip: 64

envelope:

exponent: 5

name: polynomial

extensive: true

max_neighbors: 50

name: gemnet_t

num_after_skip: 2

num_atom: 3

num_before_skip: 1

num_blocks: 3

num_concat: 1

num_radial: 128

num_spherical: 7

otf_graph: true

output_init: HeOrthogonal

rbf:

name: gaussian

regress_forces: true

optim:

batch_size: 16

clip_grad_norm: 10

ema_decay: 0.999

energy_coefficient: 1

eval_batch_size: 16

eval_every: 5000

force_coefficient: 1

loss_energy: mae

loss_force: atomwisel2

lr_gamma: 0.8

lr_initial: 0.0005

lr_milestones:

- 64000

- 96000

- 128000

- 160000

- 192000

max_epochs: 80

num_workers: 2

optimizer: AdamW

optimizer_params:

amsgrad: true

warmup_steps: -1

outputs:

energy:

level: system

forces:

eval_on_free_atoms: true

level: atom

train_on_free_atoms: true

relax_dataset: {}

slurm:

additional_parameters:

constraint: volta32gb

cpus_per_task: 3

folder: /checkpoint/abhshkdz/ocp_oct1_logs/57864354

gpus_per_node: 8

job_id: '57864354'

job_name: gndt_oc22_s2ef

mem: 480GB

nodes: 2

ntasks_per_node: 8

partition: ocp

time: 4320

task:

dataset: oc22_lmdb

eval_on_free_atoms: true

primary_metric: forces_mae

train_on_free_atoms: true

test_dataset: {}

trainer: ocp

val_dataset: {}

INFO:root:Loading model: gemnet_t

INFO:root:Loaded GemNetT with 31671825 parameters.

INFO:root:Loading checkpoint in inference-only mode, not loading keys associated with trainer state!

INFO:root:Overwriting scaling factors with those loaded from checkpoint. If you're generating predictions with a pretrained checkpoint, this is the correct behavior. To disable this, delete `scale_dict` from the checkpoint.

WARNING:root:Scale factor comment not found in model

WARNING:root:No seed has been set in modelcheckpoint or OCPCalculator! Results may not be reproducible on re-run

PosixPath('/home/runner/work/fairchem/fairchem/docs/core/config.yml')

It is a good idea to redirect the output to a file. If the output gets too large here, the notebook may fail to save. Normally I would use a redirect like 2&>1, but this does not work with the main.py method. An alternative here is to open a terminal and run it there. If you have a gpu or multiple gpus, you should use the flag –num-gpus=

%%capture inference

import time

from fairchem.core.common.tutorial_utils import fairchem_main

t0 = time.time()

! python {fairchem_main()} --mode predict --config-yml {yml} --checkpoint {checkpoint_path} --cpu

print(f'Elapsed time = {time.time() - t0:1.1f} seconds')

with open('mass-inference.txt', 'wb') as f:

f.write(inference.stdout.encode('utf-8'))

! grep "Total time taken:" 'mass-inference.txt'

2025-01-21 19:37:33 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/utils.py:1112: (INFO): Total time taken: 7.040971517562866

The mass inference approach takes 1-2 minutes to run. See the output here.

results = ! grep " results_dir:" mass-inference.txt

d = results[0].split(':')[-1].strip()

import numpy as np

results = np.load(f'{d}/ocp_predictions.npz', allow_pickle=True)

results.files

['energy', 'forces', 'chunk_idx', 'ids']

It is not obvious, but the data from mass inference is not in the same order. We have to get an id from the mass inference, and then “resort” the results so they are in the same order.

inds = np.array([int(r.split('_')[0]) for r in results['ids']])

sind = np.argsort(inds)

inds[sind]

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33,

34, 35, 36, 37])

To compare this with the results, we need to get the energy data from the ase db.

from ase.db import connect

db = connect('data.db')

energies = np.array([row.energy for row in db.select('natoms>5,xc=PBE')])

natoms = np.array([row.natoms for row in db.select('natoms>5,xc=PBE')])

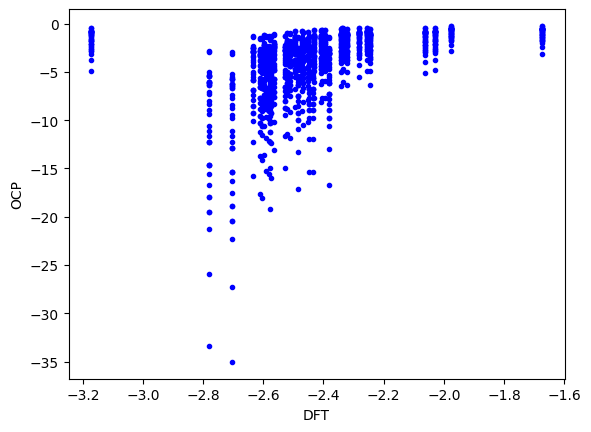

Now, we can see the predictions. They are only ok here; that is not surprising, the data set has lots of Au configurations that have never been seen by this model. Fine-tuning would certainly help improve this.

import matplotlib.pyplot as plt

plt.plot(energies / natoms, results['energy'][sind] / natoms, 'b.')

plt.xlabel('DFT')

plt.ylabel('OCP');

The ASE calculator way#

We include this here just to show that:

We get the same results

That this is much slower.

from fairchem.core.common.relaxation.ase_utils import OCPCalculator

calc = OCPCalculator(checkpoint_path=checkpoint_path, cpu=False)

/home/runner/work/fairchem/fairchem/src/fairchem/core/common/relaxation/ase_utils.py:191: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

checkpoint = torch.load(checkpoint_path, map_location=torch.device("cpu"))

WARNING:root:Detected old config, converting to new format. Consider updating to avoid potential incompatibilities.

INFO:root:amp: true

cmd:

checkpoint_dir: /home/runner/work/fairchem/fairchem/docs/core/checkpoints/2025-01-21-19-37-36

commit: c162617

identifier: ''

logs_dir: /home/runner/work/fairchem/fairchem/docs/core/logs/wandb/2025-01-21-19-37-36

print_every: 100

results_dir: /home/runner/work/fairchem/fairchem/docs/core/results/2025-01-21-19-37-36

seed: null

timestamp_id: 2025-01-21-19-37-36

version: 0.1.dev1+gc162617

dataset:

format: oc22_lmdb

key_mapping:

force: forces

y: energy

normalize_labels: false

oc20_ref: /checkpoint/janlan/ocp/other_data/final_ref_energies_02_07_2021.pkl

raw_energy_target: true

evaluation_metrics:

metrics:

energy:

- mae

forces:

- forcesx_mae

- forcesy_mae

- forcesz_mae

- mae

- cosine_similarity

- magnitude_error

misc:

- energy_forces_within_threshold

primary_metric: forces_mae

gp_gpus: null

gpus: 0

logger: wandb

loss_functions:

- energy:

coefficient: 1

fn: mae

- forces:

coefficient: 1

fn: l2mae

model:

activation: silu

cbf:

name: spherical_harmonics

cutoff: 6.0

direct_forces: true

emb_size_atom: 512

emb_size_bil_trip: 64

emb_size_cbf: 16

emb_size_edge: 512

emb_size_rbf: 16

emb_size_trip: 64

envelope:

exponent: 5

name: polynomial

extensive: true

max_neighbors: 50

name: gemnet_t

num_after_skip: 2

num_atom: 3

num_before_skip: 1

num_blocks: 3

num_concat: 1

num_radial: 128

num_spherical: 7

otf_graph: true

output_init: HeOrthogonal

rbf:

name: gaussian

regress_forces: true

optim:

batch_size: 16

clip_grad_norm: 10

ema_decay: 0.999

energy_coefficient: 1

eval_batch_size: 16

eval_every: 5000

force_coefficient: 1

loss_energy: mae

loss_force: atomwisel2

lr_gamma: 0.8

lr_initial: 0.0005

lr_milestones:

- 64000

- 96000

- 128000

- 160000

- 192000

max_epochs: 80

num_workers: 2

optimizer: AdamW

optimizer_params:

amsgrad: true

warmup_steps: -1

outputs:

energy:

level: system

forces:

eval_on_free_atoms: true

level: atom

train_on_free_atoms: true

relax_dataset: {}

slurm:

additional_parameters:

constraint: volta32gb

cpus_per_task: 3

folder: /checkpoint/abhshkdz/ocp_oct1_logs/57864354

gpus_per_node: 8

job_id: '57864354'

job_name: gndt_oc22_s2ef

mem: 480GB

nodes: 2

ntasks_per_node: 8

partition: ocp

time: 4320

task:

dataset: oc22_lmdb

eval_on_free_atoms: true

primary_metric: forces_mae

train_on_free_atoms: true

test_dataset: {}

trainer: ocp

val_dataset: {}

INFO:root:Loading model: gemnet_t

INFO:root:Loaded GemNetT with 31671825 parameters.

INFO:root:Loading checkpoint in inference-only mode, not loading keys associated with trainer state!

INFO:root:Overwriting scaling factors with those loaded from checkpoint. If you're generating predictions with a pretrained checkpoint, this is the correct behavior. To disable this, delete `scale_dict` from the checkpoint.

WARNING:root:Scale factor comment not found in model

WARNING:root:No seed has been set in modelcheckpoint or OCPCalculator! Results may not be reproducible on re-run

import time

from tqdm import tqdm

t0 = time.time()

OCP, DFT = [], []

for row in tqdm(db.select('natoms>5,xc=PBE')):

atoms = row.toatoms()

atoms.set_calculator(calc)

DFT += [row.energy / len(atoms)]

OCP += [atoms.get_potential_energy() / len(atoms)]

print(f'Elapsed time {time.time() - t0:1.1} seconds')

0it [00:00, ?it/s]

/tmp/ipykernel_3490/402181013.py:7: FutureWarning: Please use atoms.calc = calc

atoms.set_calculator(calc)

1it [00:00, 2.55it/s]

2it [00:00, 2.96it/s]

3it [00:00, 3.83it/s]

4it [00:01, 3.67it/s]

5it [00:01, 4.43it/s]

6it [00:01, 4.47it/s]

7it [00:01, 5.21it/s]

8it [00:01, 5.91it/s]

9it [00:02, 4.81it/s]

10it [00:02, 3.97it/s]

11it [00:02, 4.06it/s]

12it [00:02, 4.70it/s]

13it [00:02, 4.73it/s]

14it [00:03, 4.35it/s]

15it [00:03, 4.24it/s]

16it [00:03, 3.90it/s]

17it [00:03, 4.49it/s]

18it [00:04, 4.28it/s]

19it [00:04, 3.97it/s]

20it [00:04, 4.47it/s]

21it [00:04, 4.50it/s]

23it [00:05, 6.47it/s]

24it [00:05, 5.67it/s]

25it [00:05, 4.63it/s]

26it [00:05, 5.39it/s]

27it [00:05, 5.68it/s]

28it [00:06, 4.69it/s]

29it [00:06, 5.15it/s]

30it [00:06, 5.34it/s]

32it [00:06, 6.24it/s]

33it [00:06, 6.06it/s]

34it [00:07, 5.14it/s]

35it [00:07, 5.34it/s]

36it [00:08, 3.16it/s]

37it [00:08, 2.32it/s]

38it [00:09, 2.55it/s]

38it [00:09, 4.21it/s]

Elapsed time 9e+00 seconds

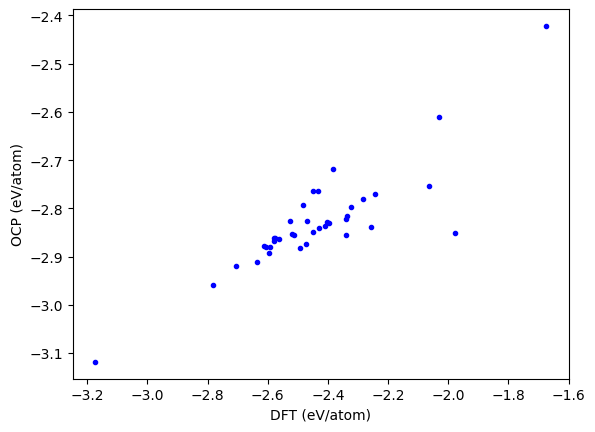

This takes at least twice as long as the mass-inference approach above. It is conceptually simpler though, and does not require resorting.

plt.plot(DFT, OCP, 'b.')

plt.xlabel('DFT (eV/atom)')

plt.ylabel('OCP (eV/atom)');

Comparing ASE calculator and main.py#

The results should be the same.

It is worth noting the default precision of predictions is float16 with main.py, but with the ASE calculator the default precision is float32. Supposedly you can specify --task.prediction_dtype=float32 at the command line to or specify it in the config.yml like we do above, but as of the tutorial this does not resolve the issue.

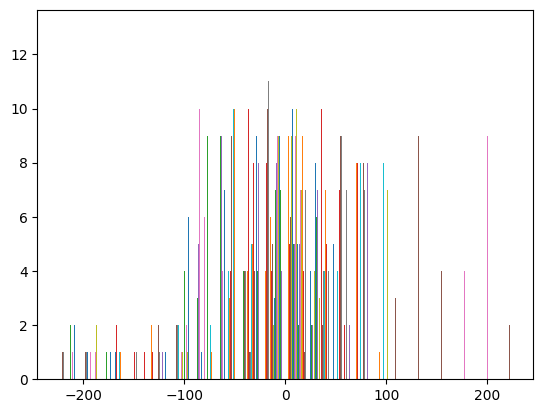

As noted above (see also Issue 542), the ASE calculator and main.py use different precisions by default, which can lead to small differences.

np.mean(np.abs(results['energy'][sind] - OCP * natoms)) # MAE

51.59992049473475

np.min(results['energy'][sind] - OCP * natoms), np.max(results['energy'][sind] - OCP * natoms)

(-225.3068141937256, 225.30681228637695)

plt.hist(results['energy'][sind] - OCP * natoms, bins=20);

Here we see many of the differences are very small. 0.0078125 = 1 / 128, and these errors strongly suggest some kind of mixed precision is responsible for these differences. It is an open issue to remove them and identify where the cause is.

(results['energy'][sind] - OCP * natoms)[0:400]

array([[ 0.00000000e+00, -1.21671219e+01, -6.97637062e+01, ...,

1.10070107e+02, 1.21540062e+02, -1.47758255e+01],

[ 1.21671219e+01, 0.00000000e+00, -5.75965843e+01, ...,

1.22237228e+02, 1.33707184e+02, -2.60870361e+00],

[ 6.97637100e+01, 5.75965881e+01, 3.81469727e-06, ...,

1.79833817e+02, 1.91303772e+02, 5.49878845e+01],

...,

[-1.10070107e+02, -1.22237228e+02, -1.79833813e+02, ...,

0.00000000e+00, 1.14699554e+01, -1.24845932e+02],

[-1.21540062e+02, -1.33707184e+02, -1.91303768e+02, ...,

-1.14699554e+01, 0.00000000e+00, -1.36315887e+02],

[ 1.47758255e+01, 2.60870361e+00, -5.49878807e+01, ...,

1.24845932e+02, 1.36315887e+02, 0.00000000e+00]])