# MIT License

#

#@title Copyright (c) 2021 CCAI Community Authors { display-mode: "form" }

#

# Permission is hereby granted, free of charge, to any person obtaining a

# copy of this software and associated documentation files (the "Software"),

# to deal in the Software without restriction, including without limitation

# the rights to use, copy, modify, merge, publish, distribute, sublicense,

# and/or sell copies of the Software, and to permit persons to whom the

# Software is furnished to do so, subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in

# all copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

# DEALINGS IN THE SOFTWARE.

Open Catalyst Project Tutorial Notebook#

Author(s):

Muhammed Shuaibi, CMU, mshuaibi@andrew.cmu.edu

Abhishek Das, FAIR, abhshkdz@fb.com

Adeesh Kolluru, CMU, akolluru@andrew.cmu.edu

Brandon Wood, NERSC, bwood@lbl.gov

Janice Lan, FAIR, janlan@fb.com

Anuroop Sriram, FAIR, anuroops@fb.com

Zachary Ulissi, CMU, zulissi@andrew.cmu.edu

Larry Zitnick, FAIR, zitnick@fb.com

FAIR - Facebook AI Research

CMU - Carnegie Mellon University

NERSC - National Energy Research Scientific Computing Center

Background #

The discovery of efficient and economic catalysts (materials) are needed to enable the widespread use of renewable energy technologies. A common approach in discovering high performance catalysts is using molecular simulations. Specifically, each simulation models the interaction of a catalyst surface with molecules that are commonly seen in electrochemical reactions. By predicting these interactions accurately, the catalyst’s impact on the overall rate of a chemical reaction may be estimated.

An important quantity in screening catalysts is their adsorption energy for the molecules, referred to as `adsorbates’, involved in the reaction of interest. The adsorption energy may be found by simulating the interaction of the adsorbate molecule on the surface of the catalyst to find their resting or relaxed energy, i.e., how tightly the adsorbate binds to the catalyst’s surface (visualized below). The rate of the chemical reaction, a value of high practical importance, is then commonly approximated using simple functions of the adsorption energy. The goal of this tutorial specifically and the project overall is to encourage research and benchmark progress towards training ML models to approximate this relaxation.

Specifically, during the course of a relaxation, given an initial set of atoms and their positions, the task is to iteratively estimate atomic forces and update atomic positions until a relaxed state is reached. The energy corresponding to the relaxed state is the structure’s ‘relaxed energy’.

As part of the Open Catalyst Project (OCP), we identify three key tasks ML models need to perform well on in order to effectively approximate DFT –

Given an Initial Structure, predict the Relaxed Energy of the relaxed strucutre (IS2RE),

Given an Initial Structure, predict the Relaxed Structure (IS2RS),

Given any Structure, predict the structure Energy and per-atom Forces (S2EF).

Objective #

This notebook serves as a tutorial for interacting with the Open Catalyst Project.

By the end of this tutorial, users will have gained:

Intuition to the dataset and it’s properties

Knowledge of the various OCP tasks: IS2RE, IS2RS, S2EF

Steps to train, validate, and predict a model on the various tasks

A walkthrough on creating your own model

(Optional) Creating your own dataset for other molecular/catalyst applications

(Optional) Using pretrained models directly with an [ASE](https://wiki.fysik.dtu.dk/ase/#:~:text=The%20Atomic%20Simulation%20Environment%20(ASE,under%20the%20GNU%20LGPL%20license.)-style calculator.

Climate Impact#

Scalable and cost-effective solutions to renewable energy storage are essential to addressing the world’s rising energy needs while reducing climate change. As illustrated in the figure below, as we increase our reliance on renewable energy sources such as wind and solar, which produce intermittent power, storage is needed to transfer power from times of peak generation to peak demand. This may require the storage of power for hours, days, or months. One solution that offers the potential of scaling to nation-sized grids is the conversion of renewable energy to other fuels, such as hydrogen. To be widely adopted, this process requires cost-effective solutions to running chemical reactions.

An open challenge is finding low-cost catalysts to drive these reactions at high rates. Through the use of quantum mechanical simulations (Density Functional Theory, DFT), new catalyst structures can be tested and evaluated. Unfortunately, the high computational cost of these simulations limits the number of structures that may be tested. The use of AI or machine learning may provide a method to efficiently approximate these calculations; reducing the time required from 24} hours to a second. This capability would transform the search for new catalysts from the present day practice of evaluating O(1,000) of handpicked candidates to the brute force search over millions or even billions of candidates.

As part of OCP, we publicly released the world’s largest quantum mechanical simulation dataset – OC20 – in the Fall of 2020 along with a suite of baselines and evaluation metrics. The creation of the dataset required over 70 million hours of compute. This dataset enables the exploration of techniques that will generalize across different catalyst materials and adsorbates. If successful, models trained on the dataset could enable the computational testing of millions of catalyst materials for a wide variety of chemical reactions. However, techniques that achieve the accuracies required** for practical impact are still beyond reach and remain an open area for research, thus encouraging research in this important area to help in meeting the world’s energy needs in the decades ahead.

** The computational catalysis community often aims for an adsorption energy MAE of 0.1-0.2 eV for practical relevance.

Target Audience#

This tutorial is designed for those interested in application of ML towards climate change. More specifically, those interested in material/catalyst discovery and Graph Nueral Networks (GNNs) will find lots of benefit here. Little to no domain chemistry knowledge is necessary as it will be covered in the tutorial. Experience with GNNs is a plus but not required.

We have designed this notebook in a manner to get the ML communnity up to speed as far as background knowledge is concerned, and the catalysis community to better understand how to use the OCP’s state-of-the-art models in their everyday workflows.

Background & Prerequisites#

Basic experience training ML models. Familiarity with PyTorch. Familiarity with Pytorch-Geometric could be helpful for development, but not required. No background in chemistry is assumed.

For those looking to apply our pretrained models on their datasets, familiarity with the [Atomic Simulation Environment](https://wiki.fysik.dtu.dk/ase/#:~:text=The%20Atomic%20Simulation%20Environment%20(ASE,under%20the%20GNU%20LGPL%20license.) is useful.

Background References#

To gain an even better understanding of the Open Catalyst Project and the problems it seeks to address, we strongly recommend the following resources:

To learn more about electrocatalysis, see our white paper.

To learn about the OC20 dataset and the associated tasks, please see the OC20 dataset paper.

Software Requirements#

See installation for installation instructions!

import torch

torch.cuda.is_available()

False

Dataset Overview#

The Open Catalyst 2020 Dataset (OC20) will be used throughout this tutorial. More details can be found here and the corresponding paper. Data is stored in PyTorch Geometric Data objects and stored in LMDB files. For each task we include several sized training splits. Validation/Test splits are broken into several subsplits: In Domain (ID), Out of Domain Adsorbate (OOD-Ads), Out of Domain Catalyast (OOD-Cat) and Out of Domain Adsorbate and Catalyst (OOD-Both). Split sizes are summarized below:

Train

S2EF - 200k, 2M, 20M, 134M(All)

IS2RE/IS2RS - 10k, 100k, 460k(All)

Val/Test

S2EF - ~1M across all subsplits

IS2RE/IS2RS - ~25k across all splits

Tutorial Use#

For the sake of this tutorial we provide much smaller splits (100 train, 20 val for all tasks) to allow users to easily store, train, and predict across the various tasks. Please refer here for details on how to download the full datasets for general use.

Data Download [~1min] #

FOR TUTORIAL USE ONLY

%%bash

mkdir data

cd data

wget -q http://dl.fbaipublicfiles.com/opencatalystproject/data/tutorial_data.tar.gz -O tutorial_data.tar.gz

tar -xzvf tutorial_data.tar.gz

rm tutorial_data.tar.gz

mkdir: cannot create directory ‘data’: File exists

./

./is2re/

./is2re/train_100/

./is2re/train_100/data.lmdb

./is2re/train_100/data.lmdb-lock

./is2re/val_20/

./is2re/val_20/data.lmdb

./is2re/val_20/data.lmdb-lock

./s2ef/

./s2ef/train_100/

./s2ef/train_100/data.lmdb

./s2ef/train_100/data.lmdb-lock

./s2ef/val_20/

./s2ef/val_20/data.lmdb

./s2ef/val_20/data.lmdb-lock

Data Visualization #

import matplotlib

matplotlib.use('Agg')

import os

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

params = {

'axes.labelsize': 14,

'font.size': 14,

'font.family': ' DejaVu Sans',

'legend.fontsize': 20,

'xtick.labelsize': 20,

'ytick.labelsize': 20,

'axes.labelsize': 25,

'axes.titlesize': 25,

'text.usetex': False,

'figure.figsize': [12, 12]

}

matplotlib.rcParams.update(params)

import ase.io

from ase.io.trajectory import Trajectory

from ase.io import extxyz

from ase.calculators.emt import EMT

from ase.build import fcc100, add_adsorbate, molecule

from ase.constraints import FixAtoms

from ase.optimize import LBFGS

from ase.visualize.plot import plot_atoms

from ase import Atoms

from IPython.display import Image

Understanding the data#

We use the Atomic Simulation Environment (ASE) library to interact with our data. This notebook will provide you with some intuition on how atomic data is generated, how the data is structured, how to visualize the data, and the specific properties that are passed on to our models.

Generating sample data#

The OC20 dataset was generated using density functional theory (DFT), a quantum chemistry method for modeling atomistic environments. For more details, please see our dataset paper. In this notebook, we generate sample data in the same format as the OC20 dataset; however, we use a faster method that is less accurate called effective-medium theory (EMT) because our DFT calculations are too computationally expensive to run here. EMT is great for demonstration purposes but not accurate enough for our actual catalysis applications. Below is a structural relaxation of a catalyst system, a propane (C3H8) adsorbate on a copper (Cu) surface. Throughout this tutorial a surface may be referred to as a slab and the combination of an adsorbate and a surface as an adslab.

Structural relaxations#

A structural relaxation or structure optimization is the process of iteratively updating atom positions to find the atom positions that minimize the energy of the structure. Standard optimization methods are used in structural relaxations — below we use the Limited-Memory Broyden–Fletcher–Goldfarb–Shanno (LBFGS) algorithm. The step number, time, energy, and force max are printed at each optimization step. Each step is considered one example because it provides all the information we need to train models for the S2EF task and the entire set of steps is referred to as a trajectory. Visualizing intermediate structures or viewing the entire trajectory can be illuminating to understand what is physically happening and to look for problems in the simulation, especially when we run ML-driven relaxations. Common problems one may look out for - atoms excessively overlapping/colliding with each other and atoms flying off into random directions.

###DATA GENERATION - FEEL FREE TO SKIP###

# This cell sets up and runs a structural relaxation

# of a propane (C3H8) adsorbate on a copper (Cu) surface

adslab = fcc100("Cu", size=(3, 3, 3))

adsorbate = molecule("C3H8")

add_adsorbate(adslab, adsorbate, 3, offset=(1, 1)) # adslab = adsorbate + slab

# tag all slab atoms below surface as 0, surface as 1, adsorbate as 2

tags = np.zeros(len(adslab))

tags[18:27] = 1

tags[27:] = 2

adslab.set_tags(tags)

# Fixed atoms are prevented from moving during a structure relaxation.

# We fix all slab atoms beneath the surface.

cons= FixAtoms(indices=[atom.index for atom in adslab if (atom.tag == 0)])

adslab.set_constraint(cons)

adslab.center(vacuum=13.0, axis=2)

adslab.set_pbc(True)

adslab.set_calculator(EMT())

os.makedirs('data', exist_ok=True)

# Define structure optimizer - LBFGS. Run for 100 steps,

# or if the max force on all atoms (fmax) is below 0 ev/A.

# fmax is typically set to 0.01-0.05 eV/A,

# for this demo however we run for the full 100 steps.

dyn = LBFGS(adslab, trajectory="data/toy_c3h8_relax.traj")

dyn.run(fmax=0, steps=100)

traj = ase.io.read("data/toy_c3h8_relax.traj", ":")

# convert traj format to extxyz format (used by OC20 dataset)

columns = (['symbols','positions', 'move_mask', 'tags', 'forces'])

with open('data/toy_c3h8_relax.extxyz','w') as f:

extxyz.write_xyz(f, traj, columns=columns)

Step Time Energy fmax

LBFGS: 0 04:43:29 15.804700 6.776430

LBFGS: 1 04:43:29 12.190607 4.323222

LBFGS: 2 04:43:29 10.240169 2.265527

LBFGS: 3 04:43:29 9.779223 0.937247

LBFGS: 4 04:43:29 9.671525 0.770173

LBFGS: 5 04:43:29 9.574461 0.663540

LBFGS: 6 04:43:29 9.537502 0.571800

LBFGS: 7 04:43:29 9.516673 0.446620

LBFGS: 8 04:43:29 9.481330 0.461143

LBFGS: 9 04:43:29 9.462255 0.293081

LBFGS: 10 04:43:29 9.448937 0.249010

LBFGS: 11 04:43:29 9.433813 0.237051

LBFGS: 12 04:43:29 9.418884 0.260245

LBFGS: 13 04:43:29 9.409649 0.253162

LBFGS: 14 04:43:29 9.404838 0.162398

LBFGS: 15 04:43:29 9.401753 0.182298

LBFGS: 16 04:43:29 9.397314 0.259163

LBFGS: 17 04:43:29 9.387947 0.345022

/tmp/ipykernel_3934/747130225.py:23: FutureWarning: Please use atoms.calc = calc

adslab.set_calculator(EMT())

LBFGS: 18 04:43:29 9.370825 0.407041

LBFGS: 19 04:43:29 9.342222 0.433340

LBFGS: 20 04:43:29 9.286822 0.500200

LBFGS: 21 04:43:29 9.249910 0.524052

LBFGS: 22 04:43:29 9.187179 0.511994

LBFGS: 23 04:43:29 9.124811 0.571796

LBFGS: 24 04:43:29 9.066185 0.540934

LBFGS: 25 04:43:30 9.000116 1.079833

LBFGS: 26 04:43:30 8.893632 0.752759

LBFGS: 27 04:43:30 8.845939 0.332051

LBFGS: 28 04:43:30 8.815173 0.251242

LBFGS: 29 04:43:30 8.808721 0.214337

LBFGS: 30 04:43:30 8.794643 0.154611

LBFGS: 31 04:43:30 8.789162 0.201404

LBFGS: 32 04:43:30 8.782320 0.175517

LBFGS: 33 04:43:30 8.780394 0.103718

LBFGS: 34 04:43:30 8.778410 0.107611

LBFGS: 35 04:43:30 8.775079 0.179747

LBFGS: 36 04:43:30 8.766987 0.333401

LBFGS: 37 04:43:30 8.750249 0.530715

LBFGS: 38 04:43:30 8.725928 0.685116

LBFGS: 39 04:43:30 8.702312 0.582260

LBFGS: 40 04:43:30 8.661515 0.399625

LBFGS: 41 04:43:30 8.643432 0.558474

LBFGS: 42 04:43:30 8.621201 0.367288

LBFGS: 43 04:43:30 8.614414 0.139424

LBFGS: 44 04:43:30 8.610785 0.137160

LBFGS: 45 04:43:30 8.608134 0.146375

LBFGS: 46 04:43:30 8.604928 0.119648

LBFGS: 47 04:43:30 8.599151 0.135424

LBFGS: 48 04:43:30 8.594063 0.147913

LBFGS: 49 04:43:30 8.589493 0.153840

LBFGS: 50 04:43:30 8.587274 0.088460

LBFGS: 51 04:43:30 8.584633 0.093750

LBFGS: 52 04:43:30 8.580239 0.140870

LBFGS: 53 04:43:30 8.572938 0.254272

LBFGS: 54 04:43:30 8.563343 0.291885

LBFGS: 55 04:43:30 8.554117 0.196557

LBFGS: 56 04:43:30 8.547597 0.129064

LBFGS: 57 04:43:30 8.542086 0.128020

LBFGS: 58 04:43:30 8.535432 0.098202

LBFGS: 59 04:43:30 8.533622 0.127672

LBFGS: 60 04:43:30 8.527487 0.116729

LBFGS: 61 04:43:30 8.523863 0.121762

LBFGS: 62 04:43:30 8.519229 0.130541

LBFGS: 63 04:43:30 8.515424 0.101902

LBFGS: 64 04:43:30 8.511240 0.212223

LBFGS: 65 04:43:30 8.507967 0.266593

LBFGS: 66 04:43:30 8.503903 0.237734

LBFGS: 67 04:43:30 8.497575 0.162253

LBFGS: 68 04:43:30 8.485434 0.202203

LBFGS: 69 04:43:30 8.466738 0.215895

LBFGS: 70 04:43:30 8.467607 0.334764

LBFGS: 71 04:43:30 8.454037 0.106310

LBFGS: 72 04:43:30 8.448980 0.119721

LBFGS: 73 04:43:30 8.446550 0.099221

LBFGS: 74 04:43:30 8.444705 0.056244

LBFGS: 75 04:43:30 8.443403 0.038831

LBFGS: 76 04:43:30 8.442646 0.054772

LBFGS: 77 04:43:30 8.442114 0.061370

LBFGS: 78 04:43:30 8.440960 0.058800

LBFGS: 79 04:43:30 8.439820 0.048198

LBFGS: 80 04:43:30 8.438600 0.051251

LBFGS: 81 04:43:30 8.437429 0.054130

LBFGS: 82 04:43:30 8.435695 0.067234

LBFGS: 83 04:43:30 8.431957 0.085678

LBFGS: 84 04:43:30 8.423485 0.133240

LBFGS: 85 04:43:30 8.413846 0.207812

LBFGS: 86 04:43:30 8.404849 0.178747

LBFGS: 87 04:43:30 8.385339 0.169017

LBFGS: 88 04:43:30 8.386849 0.187645

LBFGS: 89 04:43:30 8.371078 0.118124

LBFGS: 90 04:43:30 8.368801 0.094222

LBFGS: 91 04:43:30 8.366226 0.066960

LBFGS: 92 04:43:30 8.361680 0.054964

LBFGS: 93 04:43:30 8.360631 0.047342

LBFGS: 94 04:43:30 8.359692 0.024179

LBFGS: 95 04:43:30 8.359361 0.015549

LBFGS: 96 04:43:30 8.359163 0.014284

LBFGS: 97 04:43:30 8.359102 0.015615

LBFGS: 98 04:43:30 8.359048 0.015492

LBFGS: 99 04:43:30 8.358986 0.014214

LBFGS: 100 04:43:30 8.358921 0.013159

/opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages/ase/io/extxyz.py:311: UserWarning: Skipping unhashable information adsorbate_info

warnings.warn('Skipping unhashable information '

Reading a trajectory#

identifier = "toy_c3h8_relax.extxyz"

# the `index` argument corresponds to what frame of the trajectory to read in, specifiying ":" reads in the full trajectory.

traj = ase.io.read(f"data/{identifier}", index=":")

Viewing a trajectory#

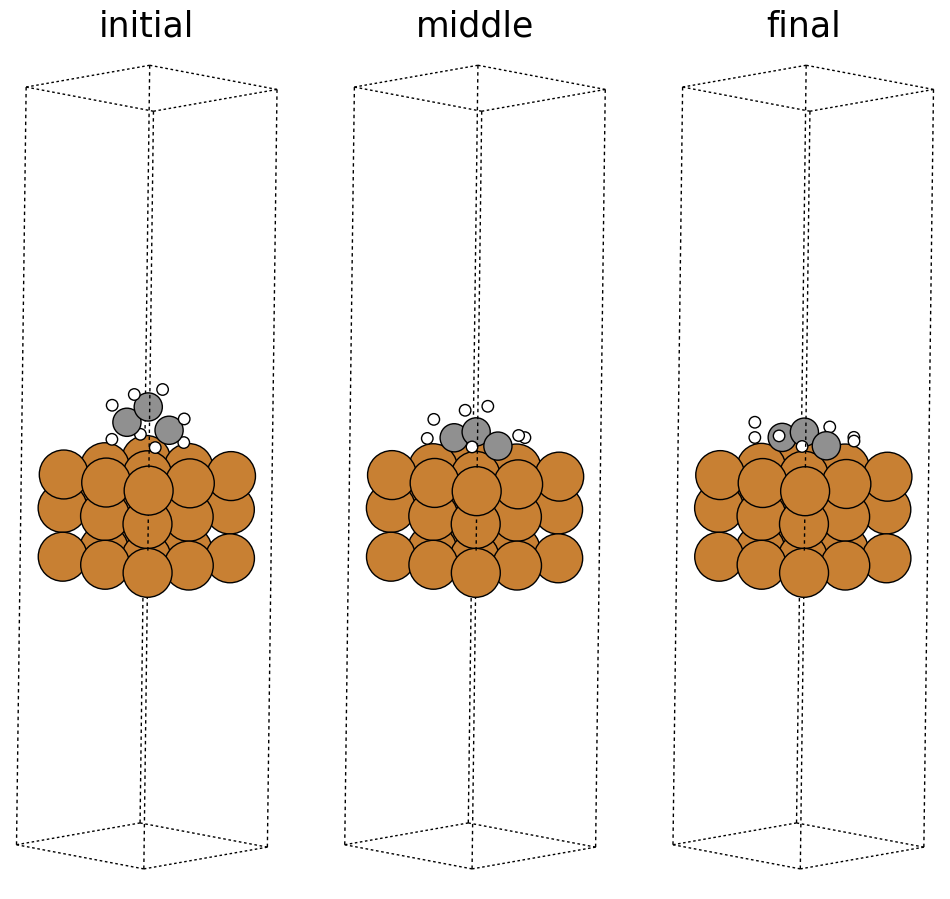

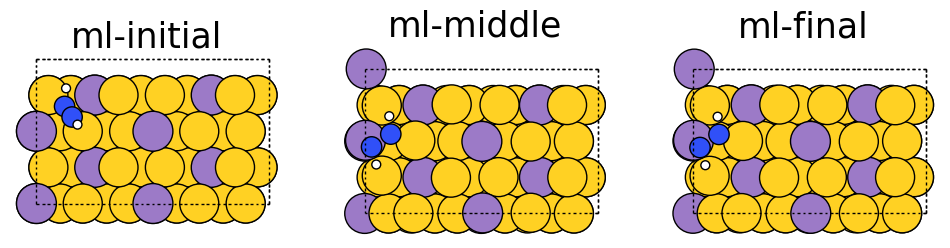

Below we visualize the initial, middle, and final steps in the structural relaxation trajectory from above. Copper atoms in the surface are colored orange, the propane adsorbate on the surface has grey colored carbon atoms and white colored hydrogen atoms. The adsorbate’s structure changes during the simulation and you can see how it relaxes on the surface. In this case, the relaxation looks normal; however, there can be instances where the adsorbate flies away (desorbs) from the surface or the adsorbate can break apart (dissociation), which are hard to detect without visualization. Additionally, visualizations can be used as a quick sanity check to ensure the initial system is set up correctly and there are no major issues with the simulation.

fig, ax = plt.subplots(1, 3)

labels = ['initial', 'middle', 'final']

for i in range(3):

ax[i].axis('off')

ax[i].set_title(labels[i])

ase.visualize.plot.plot_atoms(traj[0],

ax[0],

radii=0.8,

rotation=("-75x, 45y, 10z"))

ase.visualize.plot.plot_atoms(traj[50],

ax[1],

radii=0.8,

rotation=("-75x, 45y, 10z"))

ase.visualize.plot.plot_atoms(traj[-1],

ax[2],

radii=0.8,

rotation=("-75x, 45y, 10z"))

<Axes: title={'center': 'final'}>

Data contents #

Here we take a closer look at what information is contained within these trajectories.

i_structure = traj[0]

i_structure

Atoms(symbols='Cu27C3H8', pbc=True, cell=[7.65796644025031, 7.65796644025031, 33.266996999999996], tags=..., constraint=FixAtoms(indices=[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17]), calculator=SinglePointCalculator(...))

Atomic numbers#

numbers = i_structure.get_atomic_numbers()

print(numbers)

[29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29 29

29 29 29 6 6 6 1 1 1 1 1 1 1 1]

Atomic symbols#

symbols = np.array(i_structure.get_chemical_symbols())

print(symbols)

['Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu'

'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'Cu' 'C' 'C'

'C' 'H' 'H' 'H' 'H' 'H' 'H' 'H' 'H']

Unit cell#

The unit cell is the volume containing our system of interest. Express as a 3x3 array representing the directional vectors that make up the volume. Illustrated as the dashed box in the above visuals.

cell = np.array(i_structure.cell)

print(cell)

[[ 7.65796644 0. 0. ]

[ 0. 7.65796644 0. ]

[ 0. 0. 33.266997 ]]

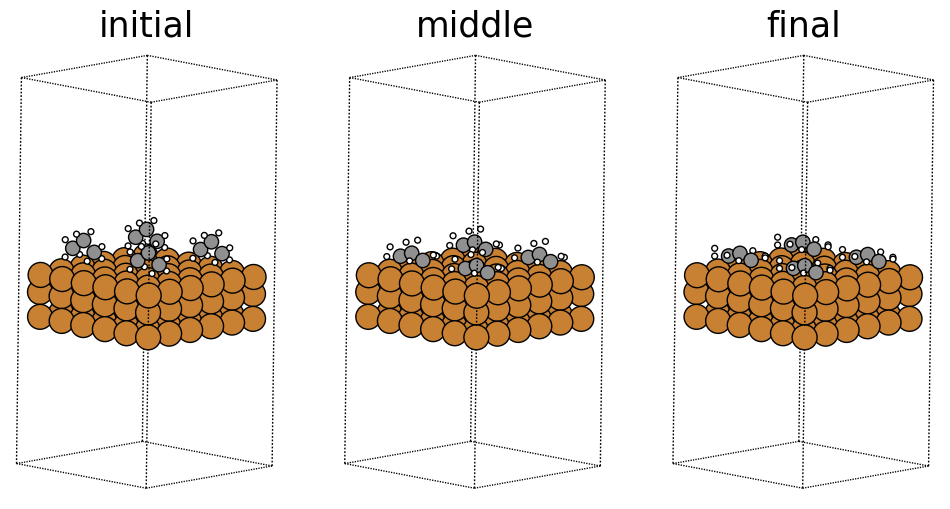

Periodic boundary conditions (PBC)#

x,y,z boolean representing whether a unit cell repeats in the corresponding directions. The OC20 dataset sets this to [True, True, True], with a large enough vacuum layer above the surface such that a unit cell does not see itself in the z direction. Although the original structure shown above is what get’s passed into our models, the presence of PBC allows it to effectively repeat infinitely in the x and y directions. Below we visualize the same structure with a periodicity of 2 in all directions, what the model may effectively see.

pbc = i_structure.pbc

print(pbc)

[ True True True]

fig, ax = plt.subplots(1, 3)

labels = ['initial', 'middle', 'final']

for i in range(3):

ax[i].axis('off')

ax[i].set_title(labels[i])

ase.visualize.plot.plot_atoms(traj[0].repeat((2,2,1)),

ax[0],

radii=0.8,

rotation=("-75x, 45y, 10z"))

ase.visualize.plot.plot_atoms(traj[50].repeat((2,2,1)),

ax[1],

radii=0.8,

rotation=("-75x, 45y, 10z"))

ase.visualize.plot.plot_atoms(traj[-1].repeat((2,2,1)),

ax[2],

radii=0.8,

rotation=("-75x, 45y, 10z"))

<Axes: title={'center': 'final'}>

Fixed atoms constraint#

In reality, surfaces contain many, many more atoms beneath what we’ve illustrated as the surface. At an infinite depth, these subsurface atoms would look just like the bulk structure. We approximate a true surface by fixing the subsurface atoms into their “bulk” locations. This ensures that they cannot move at the “bottom” of the surface. If they could, this would throw off our calculations. Consistent with the above, we fix all atoms with tags=0, and denote them as “fixed”. All other atoms are considered “free”.

cons = i_structure.constraints[0]

print(cons, '\n')

# indices of fixed atoms

indices = cons.index

print(indices, '\n')

# fixed atoms correspond to tags = 0

print(tags[indices])

FixAtoms(indices=[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17])

[ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17]

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

Adsorption energy#

The energy of the system is one of the properties of interest in the OC20 dataset. It’s important to note that absolute energies provide little value to researchers and must be referenced properly to be useful. The OC20 dataset references all it’s energies to the bare slab + gas references to arrive at adsorption energies. Adsorption energies are important in studying catalysts and their corresponding reaction rates. In addition to the structure relaxations of the OC20 dataset, bare slab and gas (N2, H2, H2O, CO) relaxations were carried out with DFT in order to calculate adsorption energies.

final_structure = traj[-1]

relaxed_energy = final_structure.get_potential_energy()

print(f'Relaxed absolute energy = {relaxed_energy} eV')

# Corresponding raw slab used in original adslab (adsorbate+slab) system.

raw_slab = fcc100("Cu", size=(3, 3, 3))

raw_slab.set_calculator(EMT())

raw_slab_energy = raw_slab.get_potential_energy()

print(f'Raw slab energy = {raw_slab_energy} eV')

adsorbate = Atoms("C3H8").get_chemical_symbols()

# For clarity, we define arbitrary gas reference energies here.

# A more detailed discussion of these calculations can be found in the corresponding paper's SI.

gas_reference_energies = {'H': .3, 'O': .45, 'C': .35, 'N': .50}

adsorbate_reference_energy = 0

for ads in adsorbate:

adsorbate_reference_energy += gas_reference_energies[ads]

print(f'Adsorbate reference energy = {adsorbate_reference_energy} eV\n')

adsorption_energy = relaxed_energy - raw_slab_energy - adsorbate_reference_energy

print(f'Adsorption energy: {adsorption_energy} eV')

Relaxed absolute energy = 8.358921451410891 eV

Raw slab energy = 8.127167122749576 eV

Adsorbate reference energy = 3.4499999999999993 eV

Adsorption energy: -3.2182456713386838 eV

/tmp/ipykernel_3934/2478225434.py:7: FutureWarning: Please use atoms.calc = calc

raw_slab.set_calculator(EMT())

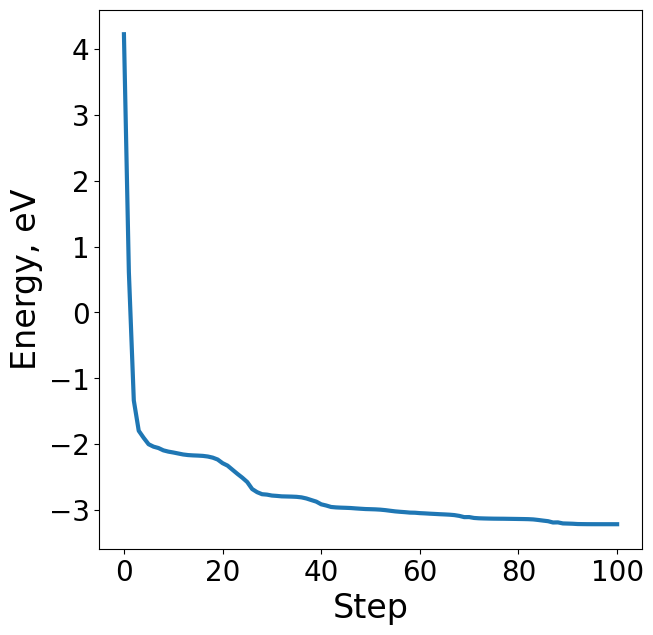

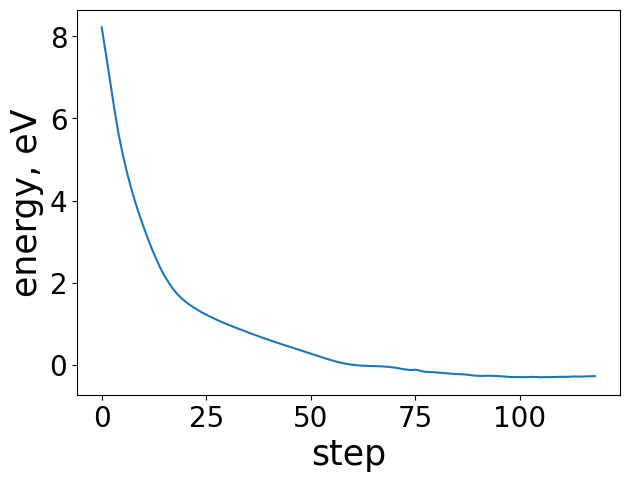

Plot energy profile of toy trajectory#

Plotting the energy profile of our trajectory is a good way to ensure nothing strange has occured. We expect to see a decreasing monotonic function. If the energy is consistently increasing or there’s multiple large spikes this could be a sign of some issues in the optimization. This is particularly useful for when analyzing ML-driven relaxations and whether they make general physical sense.

energies = [image.get_potential_energy() - raw_slab_energy - adsorbate_reference_energy for image in traj]

plt.figure(figsize=(7, 7))

plt.plot(range(len(energies)), energies, lw=3)

plt.xlabel("Step", fontsize=24)

plt.ylabel("Energy, eV", fontsize=24)

Text(0, 0.5, 'Energy, eV')

Force#

Forces are another important property of the OC20 dataset. Unlike datasets like QM9 which contain only ground state properties, the OC20 dataset contains per-atom forces necessary to carry out atomistic simulations. Physically, forces are the negative gradient of energy w.r.t atomic positions: \(F = -\frac{dE}{dx}\). Although not mandatory (depending on the application), maintaining this energy-force consistency is important for models that seek to make predictions on both properties.

The “apply_constraint” argument controls whether to apply system constraints to the forces. In the OC20 dataset, this controls whether to return forces for fixed atoms (apply_constraint=False) or return 0s (apply_constraint=True).

# Returning forces for all atoms - regardless of whether "fixed" or "free"

i_structure.get_forces(apply_constraint=False)

array([[-1.07900000e-05, -3.80000000e-06, 1.13560540e-01],

[ 0.00000000e+00, -4.29200000e-05, 1.13302410e-01],

[ 1.07900000e-05, -3.80000000e-06, 1.13560540e-01],

[-1.84600000e-05, 0.00000000e+00, 1.13543430e-01],

[ 0.00000000e+00, 0.00000000e+00, 1.13047800e-01],

[ 1.84600000e-05, -0.00000000e+00, 1.13543430e-01],

[-1.07900000e-05, 3.80000000e-06, 1.13560540e-01],

[-0.00000000e+00, 4.29200000e-05, 1.13302410e-01],

[ 1.07900000e-05, 3.80000000e-06, 1.13560540e-01],

[-1.10430500e-02, -2.53094000e-03, -4.84573700e-02],

[ 1.10430500e-02, -2.53094000e-03, -4.84573700e-02],

[-0.00000000e+00, -2.20890000e-04, -2.07827000e-03],

[-1.10430500e-02, 2.53094000e-03, -4.84573700e-02],

[ 1.10430500e-02, 2.53094000e-03, -4.84573700e-02],

[-0.00000000e+00, 2.20890000e-04, -2.07827000e-03],

[-3.49808000e-03, -0.00000000e+00, -7.85544000e-03],

[ 3.49808000e-03, -0.00000000e+00, -7.85544000e-03],

[-0.00000000e+00, -0.00000000e+00, -5.97640000e-04],

[-3.18144370e-01, -2.36420450e-01, -3.97089230e-01],

[ 0.00000000e+00, -2.18895316e+00, -2.74768262e+00],

[ 3.18144370e-01, -2.36420450e-01, -3.97089230e-01],

[-5.65980520e-01, 0.00000000e+00, -6.16046990e-01],

[ 0.00000000e+00, -0.00000000e+00, -4.47152822e+00],

[ 5.65980520e-01, 0.00000000e+00, -6.16046990e-01],

[-3.18144370e-01, 2.36420450e-01, -3.97089230e-01],

[-0.00000000e+00, 2.18895316e+00, -2.74768262e+00],

[ 3.18144370e-01, 2.36420450e-01, -3.97089230e-01],

[-0.00000000e+00, 0.00000000e+00, -3.96835355e+00],

[-0.00000000e+00, -3.64190926e+00, 5.71458646e+00],

[-0.00000000e+00, 3.64190926e+00, 5.71458646e+00],

[-2.18178516e+00, 0.00000000e+00, 1.67589182e+00],

[ 2.18178516e+00, 0.00000000e+00, 1.67589182e+00],

[ 0.00000000e+00, 2.46333681e+00, 1.78299828e+00],

[ 0.00000000e+00, -2.46333681e+00, 1.78299828e+00],

[ 6.18714050e+00, 2.26336330e-01, -5.99485570e-01],

[-6.18714050e+00, 2.26336330e-01, -5.99485570e-01],

[-6.18714050e+00, -2.26336330e-01, -5.99485570e-01],

[ 6.18714050e+00, -2.26336330e-01, -5.99485570e-01]])

# Applying the fixed atoms constraint to the forces

i_structure.get_forces(apply_constraint=True)

array([[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[-0.31814437, -0.23642045, -0.39708923],

[ 0. , -2.18895316, -2.74768262],

[ 0.31814437, -0.23642045, -0.39708923],

[-0.56598052, 0. , -0.61604699],

[ 0. , -0. , -4.47152822],

[ 0.56598052, 0. , -0.61604699],

[-0.31814437, 0.23642045, -0.39708923],

[-0. , 2.18895316, -2.74768262],

[ 0.31814437, 0.23642045, -0.39708923],

[-0. , 0. , -3.96835355],

[-0. , -3.64190926, 5.71458646],

[-0. , 3.64190926, 5.71458646],

[-2.18178516, 0. , 1.67589182],

[ 2.18178516, 0. , 1.67589182],

[ 0. , 2.46333681, 1.78299828],

[ 0. , -2.46333681, 1.78299828],

[ 6.1871405 , 0.22633633, -0.59948557],

[-6.1871405 , 0.22633633, -0.59948557],

[-6.1871405 , -0.22633633, -0.59948557],

[ 6.1871405 , -0.22633633, -0.59948557]])

Interacting with the OC20 datasets#

The OC20 datasets are stored in LMDBs. Here we show how to interact with the datasets directly in order to better understand the data. We use LmdbDataset to read in a directory of LMDB files or a single LMDB file.

from fairchem.core.datasets import LmdbDataset

# LmdbDataset is our custom Dataset method to read the lmdbs as Data objects. Note that we need to give the path to the folder containing lmdbs for S2EF

dataset = LmdbDataset({"src": "data/s2ef/train_100/"})

print("Size of the dataset created:", len(dataset))

print(dataset[0])

Size of the dataset created: 100

Data(edge_index=[2, 2964], y=6.282500615000004, pos=[86, 3], cell=[1, 3, 3], atomic_numbers=[86], natoms=86, cell_offsets=[2964, 3], force=[86, 3], fixed=[86], tags=[86], sid=[1], fid=[1], total_frames=74, id='0_0')

data = dataset[0]

data

Data(edge_index=[2, 2964], y=6.282500615000004, pos=[86, 3], cell=[1, 3, 3], atomic_numbers=[86], natoms=86, cell_offsets=[2964, 3], force=[86, 3], fixed=[86], tags=[86], sid=[1], fid=[1], total_frames=74, id='0_0')

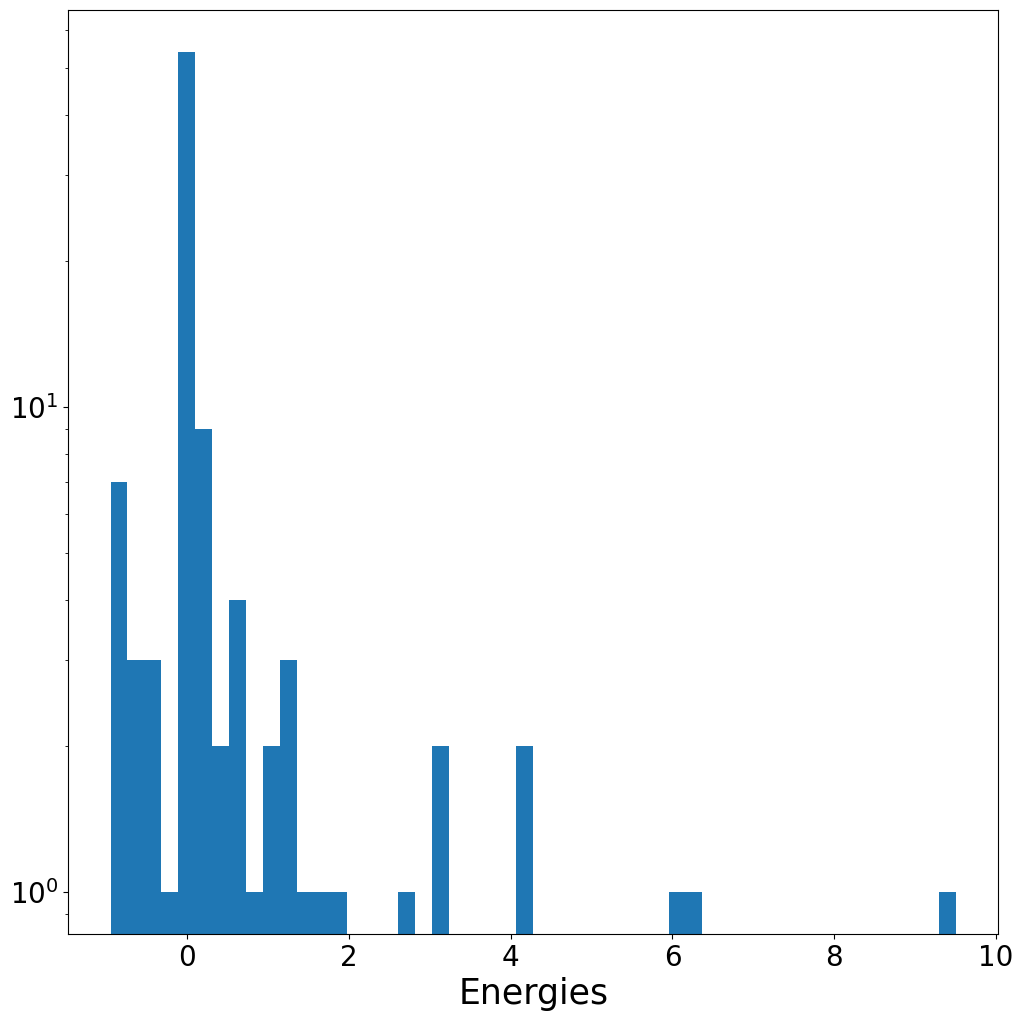

energies = torch.tensor([data.y for data in dataset])

energies

tensor([ 6.2825e+00, 4.1290e+00, 3.1451e+00, 3.0260e+00, 1.7921e+00,

1.6451e+00, 1.2257e+00, 1.2161e+00, 1.0712e+00, 7.4727e-01,

5.9575e-01, 5.7016e-01, 4.2819e-01, 3.1616e-01, 2.5283e-01,

2.2425e-01, 2.2346e-01, 2.0530e-01, 1.6090e-01, 1.1807e-01,

1.1691e-01, 9.1254e-02, 7.4997e-02, 6.3274e-02, 5.2782e-02,

4.8892e-02, 3.9609e-02, 3.1746e-02, 2.7179e-02, 2.7007e-02,

2.3709e-02, 1.8005e-02, 1.7676e-02, 1.4129e-02, 1.3162e-02,

1.1374e-02, 7.4124e-03, 7.7525e-03, 6.1224e-03, 5.2787e-03,

2.8587e-03, 1.1835e-04, -1.1200e-03, -1.3011e-03, -2.6812e-03,

-5.9202e-03, -6.1644e-03, -6.9261e-03, -9.1364e-03, -9.2114e-03,

-1.0665e-02, -1.3760e-02, -1.3588e-02, -1.4895e-02, -1.6190e-02,

-1.8660e-02, -1.4980e-02, -1.8880e-02, -2.0218e-02, -2.0559e-02,

-2.1013e-02, -2.2129e-02, -2.2748e-02, -2.3322e-02, -2.3382e-02,

-2.3865e-02, -2.3973e-02, -2.4196e-02, -2.4755e-02, -2.4951e-02,

-2.5078e-02, -2.5148e-02, -2.5257e-02, -2.5550e-02, 5.9721e+00,

9.5081e+00, 2.6373e+00, 4.0946e+00, 1.4385e+00, 1.2700e+00,

1.0081e+00, 5.3797e-01, 5.1462e-01, 2.8812e-01, 1.2429e-01,

-1.1352e-02, -2.2293e-01, -3.9102e-01, -4.3574e-01, -5.3142e-01,

-5.4777e-01, -6.3948e-01, -7.3816e-01, -8.2163e-01, -8.2526e-01,

-8.8313e-01, -8.8615e-01, -9.3446e-01, -9.5100e-01, -9.5168e-01])

plt.hist(energies, bins = 50)

plt.yscale("log")

plt.xlabel("Energies")

plt.show()

Additional Resources#

More helpful resources, tutorials, and documentation can be found at ASE’s webpage: https://wiki.fysik.dtu.dk/ase/index.html. We point to specific pages that may be of interest:

Interacting with Atoms Object: https://wiki.fysik.dtu.dk/ase/ase/atoms.html

Visualization: https://wiki.fysik.dtu.dk/ase/ase/visualize/visualize.html

Structure optimization: https://wiki.fysik.dtu.dk/ase/ase/optimize.html

More ASE Tutorials: https://wiki.fysik.dtu.dk/ase/tutorials/tutorials.html

Tasks#

In this section, we cover the different types of tasks the OC20 dataset presents and how to train and predict their corresponding models.

Structure to Energy and Forces (S2EF)

Initial Structure to Relaxed Energy (IS2RE)

Initial Structure to Relaxed Structure (IS2RS)

Tasks can be interrelated. The figure below illustrates several approaches to solving the IS2RE task:

(a) the traditional approach uses DFT along with an optimizer, such as BFGS or conjugate gradient, to iteratively update the atom positions until the relaxed structure and energy are found.

(b) using ML models trained to predict the energy and forces of a structure, S2EF can be used as a direct replacement for DFT.

(c) the relaxed structure could potentially be directly regressed from the initial structure and S2EF used to find the energy.

(d) directly compute the relaxed energy from the initial state.

NOTE The following sections are intended to demonstrate the inner workings of our codebase and what goes into running the various tasks. We do not recommend training to completion within a notebook setting. Please see the running on command line section for the preferred way to train/evaluate models.

Structure to Energy and Forces (S2EF) #

The S2EF task takes an atomic system as input and predicts the energy of the entire system and forces on each atom. This is our most general task, ultimately serving as a surrogate to DFT. A model that can perform well on this task can accelerate other applications like molecular dynamics and transitions tate calculations.

Steps for training an S2EF model#

Define or load a configuration (config), which includes the following

task

model

optimizer

dataset

trainer

Create a ForcesTrainer object

Train the model

Validate the model

For storage and compute reasons we use a very small subset of the OC20 S2EF dataset for this tutorial. Results will be considerably worse than presented in our paper.

Imports#

from fairchem.core.trainers import OCPTrainer

from fairchem.core.datasets import LmdbDataset

from fairchem.core import models

from fairchem.core.common import logger

from fairchem.core.common.utils import setup_logging, setup_imports

setup_logging()

setup_imports()

import numpy as np

import copy

import os

2025-03-29 04:43:38 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/utils.py:359: (INFO): Project root: /home/runner/work/fairchem/fairchem/src/fairchem

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/scn/spherical_harmonics.py:23: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/equiformer_v2/wigner.py:10: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

/home/runner/work/fairchem/fairchem/src/fairchem/core/models/escn/so3.py:23: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

_Jd = torch.load(os.path.join(os.path.dirname(__file__), "Jd.pt"))

Dataset#

train_src = "data/s2ef/train_100"

val_src = "data/s2ef/val_20"

Normalize data#

If you wish to normalize the targets we must compute the mean and standard deviation for our energy values. Because forces are physically related by the negative gradient of energy, we use the same multiplicative energy factor for forces.

train_dataset = LmdbDataset({"src": train_src})

energies = []

for data in train_dataset:

energies.append(data.y)

mean = np.mean(energies)

stdev = np.std(energies)

Define the Config#

For this example, we will explicitly define the config. Default config yaml files can easily be loaded with the following build_config utility. Loading a yaml config is preferable when launching jobs from the command line. We have included a set of default configs for our best models’ here.

We will also use a scaling files found here. Lets download it locally,

%%bash

wget https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet/scaling_factors/gemnet-oc-large.pt

wget https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet/scaling_factors/gemnet-oc.pt

wget https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet/scaling_factors/gemnet-dT.json

--2025-03-29 04:43:38-- https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet

/scaling_factors/gemnet-oc-large.pt

Resolving github.com (github.com)...

140.82.116.3

Connecting to github.com (github.com)|140.82.116.3|:443...

connected.

HTTP request sent, awaiting response...

302 Found

Location: https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef/all/

gemnet/scaling_factors/gemnet-oc-large.pt [following]

--2025-03-29 04:43:38-- https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef

/all/gemnet/scaling_factors/gemnet-oc-large.pt

Resolving raw.githubusercontent.com (raw.githubuserco

ntent.com)...

185.199.109.133, 185.199.110.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.

githubusercontent.com)|185.199.109.133|:443...

connected.

HTTP request sent, awaiting response...

200 OK

Length:

27199 (27K) [application/octet-stream]

Saving to: ‘gemnet-oc-large.pt’

0K .......... .......... ...... 1

00% 92.2M=0s

2025-03-29 04:43:38 (92.2 MB/s) - ‘gemnet-oc-large.pt’ saved [27199/27199]

--2025-03-29 04:43:38-- https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet

/scaling_factors/gemnet-oc.pt

Resolving github.com (github.com)...

140.82.116.3

Connecting to github.com (github.com)|140.82.116.3|:443...

connected.

HTTP request sent, awaiting response...

302 Found

Location: https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef/all/

gemnet/scaling_factors/gemnet-oc.pt [following]

--2025-03-29 04:43:39-- https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef

/all/gemnet/scaling_factors/gemnet-oc.pt

Resolving raw.githubusercontent.com (raw.githubusercontent.

com)... 185.199.110.133, 185.199.111.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.c

om (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response...

200 OK

Length: 16963 (17K) [application/octet-stream]

Saving to: ‘gemnet-oc.pt’

0K .......... ...

... 100% 98.0M=0s

2025-03-29 04:43:39 (98.0 MB/s) - ‘gemnet-o

c.pt’ saved [16963/16963]

--2025-03-29 04:43:39-- https://github.com/FAIR-Chem/fairchem/raw/main/configs/oc20/s2ef/all/gemnet

/scaling_factors/gemnet-dT.json

Resolving github.com (github.com)...

140.82.116.3

Connecting to github.com (github.com)|140.82.116.3|:443...

connected.

HTTP request sent, awaiting response...

302 Found

Location: https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef/all/

gemnet/scaling_factors/gemnet-dT.json [following]

--2025-03-29 04:43:39-- https://raw.githubusercontent.com/FAIR-Chem/fairchem/main/configs/oc20/s2ef

/all/gemnet/scaling_factors/gemnet-dT.json

Resolving raw.githubusercontent.com (raw.githubuserconten

t.com)... 185.199.111.133, 185.199.108.133, 185.199.110.133, ...

Connecting to raw.githubusercontent

.com (raw.githubusercontent.com)|185.199.111.133|:443...

connected.

HTTP request sent, awaiting response...

200 OK

Length: 816 [text/plain]

Saving to: ‘gemnet-dT.json’

0K

100% 50.5M=0s

2025-03-29 04:43:39 (50.5 MB/s) - ‘gemnet-dT.json’ saved [816

/816]

Note - we only train for a single epoch with a reduced batch size (GPU memory constraints) for demonstration purposes, modify accordingly for full convergence.

# Task

task = {

'dataset': 'lmdb', # dataset used for the S2EF task

'description': 'Regressing to energies and forces for DFT trajectories from OCP',

'type': 'regression',

'metric': 'mae',

'labels': ['potential energy'],

'grad_input': 'atomic forces',

'train_on_free_atoms': True,

'eval_on_free_atoms': True

}

# Model

model = {

"name": "gemnet_oc",

"num_spherical": 7,

"num_radial": 128,

"num_blocks": 4,

"emb_size_atom": 64,

"emb_size_edge": 64,

"emb_size_trip_in": 64,

"emb_size_trip_out": 64,

"emb_size_quad_in": 32,

"emb_size_quad_out": 32,

"emb_size_aint_in": 64,

"emb_size_aint_out": 64,

"emb_size_rbf": 16,

"emb_size_cbf": 16,

"emb_size_sbf": 32,

"num_before_skip": 2,

"num_after_skip": 2,

"num_concat": 1,

"num_atom": 3,

"num_output_afteratom": 3,

"cutoff": 12.0,

"cutoff_qint": 12.0,

"cutoff_aeaint": 12.0,

"cutoff_aint": 12.0,

"max_neighbors": 30,

"max_neighbors_qint": 8,

"max_neighbors_aeaint": 20,

"max_neighbors_aint": 1000,

"rbf": {

"name": "gaussian"

},

"envelope": {

"name": "polynomial",

"exponent": 5

},

"cbf": {"name": "spherical_harmonics"},

"sbf": {"name": "legendre_outer"},

"extensive": True,

"output_init": "HeOrthogonal",

"activation": "silu",

"regress_forces": True,

"direct_forces": True,

"forces_coupled": False,

"quad_interaction": True,

"atom_edge_interaction": True,

"edge_atom_interaction": True,

"atom_interaction": True,

"num_atom_emb_layers": 2,

"num_global_out_layers": 2,

"qint_tags": [1, 2],

"scale_file": "./gemnet-oc.pt"

}

# Optimizer

optimizer = {

'batch_size': 1, # originally 32

'eval_batch_size': 1, # originally 32

'num_workers': 2,

'lr_initial': 5.e-4,

'optimizer': 'AdamW',

'optimizer_params': {"amsgrad": True},

'scheduler': "ReduceLROnPlateau",

'mode': "min",

'factor': 0.8,

'patience': 3,

'max_epochs': 1, # used for demonstration purposes

'force_coefficient': 100,

'ema_decay': 0.999,

'clip_grad_norm': 10,

'loss_energy': 'mae',

'loss_force': 'l2mae',

}

# Dataset

dataset = [

{'src': train_src,

'normalize_labels': True,

"target_mean": mean,

"target_std": stdev,

"grad_target_mean": 0.0,

"grad_target_std": stdev

}, # train set

{'src': val_src}, # val set (optional)

]

Create the trainer#

trainer = OCPTrainer(

task=task,

model=copy.deepcopy(model), # copied for later use, not necessary in practice.

dataset=dataset,

optimizer=optimizer,

outputs={},

loss_functions={},

evaluation_metrics={},

name="s2ef",

identifier="S2EF-example",

run_dir=".", # directory to save results if is_debug=False. Prediction files are saved here so be careful not to override!

is_debug=False, # if True, do not save checkpoint, logs, or results

print_every=5,

seed=0, # random seed to use

logger="tensorboard", # logger of choice (tensorboard and wandb supported)

local_rank=0,

amp=True, # use PyTorch Automatic Mixed Precision (faster training and less memory usage),

)

2025-03-29 04:43:39 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/utils.py:1319: (WARNING): Detected old config, converting to new format. Consider updating to avoid potential incompatibilities.

2025-03-29 04:43:39 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:208: (INFO): amp: true

cmd:

checkpoint_dir: ./checkpoints/2025-03-29-04-43-44-S2EF-example

commit: core:b88b66e,experimental:NA

identifier: S2EF-example

logs_dir: ./logs/tensorboard/2025-03-29-04-43-44-S2EF-example

print_every: 5

results_dir: ./results/2025-03-29-04-43-44-S2EF-example

seed: 0

timestamp_id: 2025-03-29-04-43-44-S2EF-example

version: 0.1.dev1+gb88b66e

dataset:

format: lmdb

grad_target_mean: 0.0

grad_target_std: !!python/object/apply:numpy.core.multiarray.scalar

- &id001 !!python/object/apply:numpy.dtype

args:

- f8

- false

- true

state: !!python/tuple

- 3

- <

- null

- null

- null

- -1

- -1

- 0

- !!binary |

dPVlWhRA+D8=

key_mapping:

force: forces

y: energy

normalize_labels: true

src: data/s2ef/train_100

target_mean: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

zSXlDMrm3D8=

target_std: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

transforms:

normalizer:

energy:

mean: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

zSXlDMrm3D8=

stdev: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

forces:

mean: 0.0

stdev: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

evaluation_metrics:

metrics:

energy:

- mae

forces:

- forcesx_mae

- forcesy_mae

- forcesz_mae

- mae

- cosine_similarity

- magnitude_error

misc:

- energy_forces_within_threshold

gp_gpus: null

gpus: 0

logger: tensorboard

loss_functions:

- energy:

coefficient: 1

fn: mae

- forces:

coefficient: 100

fn: l2mae

model:

activation: silu

atom_edge_interaction: true

atom_interaction: true

cbf:

name: spherical_harmonics

cutoff: 12.0

cutoff_aeaint: 12.0

cutoff_aint: 12.0

cutoff_qint: 12.0

direct_forces: true

edge_atom_interaction: true

emb_size_aint_in: 64

emb_size_aint_out: 64

emb_size_atom: 64

emb_size_cbf: 16

emb_size_edge: 64

emb_size_quad_in: 32

emb_size_quad_out: 32

emb_size_rbf: 16

emb_size_sbf: 32

emb_size_trip_in: 64

emb_size_trip_out: 64

envelope:

exponent: 5

name: polynomial

extensive: true

forces_coupled: false

max_neighbors: 30

max_neighbors_aeaint: 20

max_neighbors_aint: 1000

max_neighbors_qint: 8

name: gemnet_oc

num_after_skip: 2

num_atom: 3

num_atom_emb_layers: 2

num_before_skip: 2

num_blocks: 4

num_concat: 1

num_global_out_layers: 2

num_output_afteratom: 3

num_radial: 128

num_spherical: 7

output_init: HeOrthogonal

qint_tags:

- 1

- 2

quad_interaction: true

rbf:

name: gaussian

regress_forces: true

sbf:

name: legendre_outer

scale_file: ./gemnet-oc.pt

optim:

batch_size: 1

clip_grad_norm: 10

ema_decay: 0.999

eval_batch_size: 1

factor: 0.8

force_coefficient: 100

loss_energy: mae

loss_force: l2mae

lr_initial: 0.0005

max_epochs: 1

mode: min

num_workers: 2

optimizer: AdamW

optimizer_params:

amsgrad: true

patience: 3

scheduler: ReduceLROnPlateau

outputs:

energy:

level: system

forces:

eval_on_free_atoms: true

level: atom

train_on_free_atoms: true

relax_dataset: {}

slurm: {}

task:

dataset: lmdb

description: Regressing to energies and forces for DFT trajectories from OCP

eval_on_free_atoms: true

grad_input: atomic forces

labels:

- potential energy

metric: mae

train_on_free_atoms: true

type: regression

test_dataset: {}

trainer: s2ef

val_dataset:

src: data/s2ef/val_20

2025-03-29 04:43:39 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:557: (INFO): Loading model: gemnet_oc

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:568: (INFO): Loaded GemNetOC with 2596214 parameters.

/home/runner/work/fairchem/fairchem/src/fairchem/core/modules/scaling/compat.py:37: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

scale_dict = torch.load(path)

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/logger.py:175: (WARNING): log_summary for Tensorboard not supported

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:358: (INFO): Loading dataset: lmdb

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/datasets/base_dataset.py:94: (WARNING): Could not find dataset metadata.npz files in '[PosixPath('data/s2ef/train_100')]'

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:166: (WARNING): Disabled BalancedBatchSampler because num_replicas=1.

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:179: (WARNING): Failed to get data sizes, falling back to uniform partitioning. BalancedBatchSampler requires a dataset that has a metadata attributed with number of atoms.

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:88: (INFO): rank: 0: Sampler created...

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:200: (INFO): Created BalancedBatchSampler with sampler=<fairchem.core.common.data_parallel.StatefulDistributedSampler object at 0x7f2423bbbce0>, batch_size=1, drop_last=False

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/datasets/base_dataset.py:94: (WARNING): Could not find dataset metadata.npz files in '[PosixPath('data/s2ef/val_20')]'

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:166: (WARNING): Disabled BalancedBatchSampler because num_replicas=1.

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:179: (WARNING): Failed to get data sizes, falling back to uniform partitioning. BalancedBatchSampler requires a dataset that has a metadata attributed with number of atoms.

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:88: (INFO): rank: 0: Sampler created...

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:200: (INFO): Created BalancedBatchSampler with sampler=<fairchem.core.common.data_parallel.StatefulDistributedSampler object at 0x7f2423be7ec0>, batch_size=1, drop_last=False

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/modules/normalization/_load_utils.py:112: (INFO): normalizers checkpoint for targets ['energy'] have been saved to: ./checkpoints/2025-03-29-04-43-44-S2EF-example/normalizers.pt

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/modules/normalization/_load_utils.py:112: (INFO): normalizers checkpoint for targets ['energy', 'forces'] have been saved to: ./checkpoints/2025-03-29-04-43-44-S2EF-example/normalizers.pt

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:491: (INFO): Normalization values for output energy: mean=0.45158625849998374, rmsd=1.5156444102461508.

2025-03-29 04:43:41 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:491: (INFO): Normalization values for output forces: mean=0.0, rmsd=1.5156444102461508.

Train the model#

trainer.train()

2025-03-29 04:43:46 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.09e+01, forcesx_mae: 3.30e-01, forcesy_mae: 3.57e-01, forcesz_mae: 4.72e-01, forces_mae: 3.87e-01, forces_cosine_similarity: 1.86e-02, forces_magnitude_error: 6.38e-01, loss: 7.25e+01, lr: 5.00e-04, epoch: 5.00e-02, step: 5.00e+00

2025-03-29 04:43:51 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 8.43e+00, forcesx_mae: 1.68e-01, forcesy_mae: 1.81e-01, forcesz_mae: 2.19e-01, forces_mae: 1.89e-01, forces_cosine_similarity: 4.28e-02, forces_magnitude_error: 2.69e-01, loss: 3.04e+01, lr: 5.00e-04, epoch: 1.00e-01, step: 1.00e+01

2025-03-29 04:43:56 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 4.58e+00, forcesx_mae: 1.44e-01, forcesy_mae: 2.21e-01, forcesz_mae: 2.22e-01, forces_mae: 1.95e-01, forces_cosine_similarity: 8.30e-02, forces_magnitude_error: 2.61e-01, loss: 2.73e+01, lr: 5.00e-04, epoch: 1.50e-01, step: 1.50e+01

2025-03-29 04:44:01 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 2.41e+01, forcesx_mae: 3.02e-01, forcesy_mae: 1.15e+00, forcesz_mae: 5.77e-01, forces_mae: 6.76e-01, forces_cosine_similarity: -1.29e-01, forces_magnitude_error: 1.09e+00, loss: 1.00e+02, lr: 5.00e-04, epoch: 2.00e-01, step: 2.00e+01

2025-03-29 04:44:06 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 9.50e+00, forcesx_mae: 2.23e-01, forcesy_mae: 4.82e-01, forcesz_mae: 3.30e-01, forces_mae: 3.45e-01, forces_cosine_similarity: 1.79e-01, forces_magnitude_error: 4.21e-01, loss: 4.95e+01, lr: 5.00e-04, epoch: 2.50e-01, step: 2.50e+01

2025-03-29 04:44:11 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.92e+00, forcesx_mae: 9.23e-02, forcesy_mae: 1.45e-01, forcesz_mae: 1.41e-01, forces_mae: 1.26e-01, forces_cosine_similarity: 1.35e-01, forces_magnitude_error: 1.37e-01, loss: 1.81e+01, lr: 5.00e-04, epoch: 3.00e-01, step: 3.00e+01

2025-03-29 04:44:16 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 1.27e+00, forcesx_mae: 9.02e-02, forcesy_mae: 1.01e-01, forcesz_mae: 1.37e-01, forces_mae: 1.09e-01, forces_cosine_similarity: 1.75e-01, forces_magnitude_error: 1.57e-01, loss: 1.53e+01, lr: 5.00e-04, epoch: 3.50e-01, step: 3.50e+01

2025-03-29 04:44:21 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.54e+00, forcesx_mae: 1.15e-01, forcesy_mae: 2.91e-01, forcesz_mae: 1.59e-01, forces_mae: 1.88e-01, forces_cosine_similarity: 1.06e-01, forces_magnitude_error: 2.78e-01, loss: 2.80e+01, lr: 5.00e-04, epoch: 4.00e-01, step: 4.00e+01

2025-03-29 04:44:26 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 5.46e+00, forcesx_mae: 2.24e-01, forcesy_mae: 4.34e-01, forcesz_mae: 2.30e-01, forces_mae: 2.96e-01, forces_cosine_similarity: 6.79e-02, forces_magnitude_error: 5.51e-01, loss: 3.59e+01, lr: 5.00e-04, epoch: 4.50e-01, step: 4.50e+01

2025-03-29 04:44:31 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 1.35e+00, forcesx_mae: 9.93e-02, forcesy_mae: 1.12e-01, forcesz_mae: 1.72e-01, forces_mae: 1.28e-01, forces_cosine_similarity: 2.90e-01, forces_magnitude_error: 2.32e-01, loss: 1.78e+01, lr: 5.00e-04, epoch: 5.00e-01, step: 5.00e+01

2025-03-29 04:44:37 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.67e+00, forcesx_mae: 1.29e-01, forcesy_mae: 2.21e-01, forcesz_mae: 1.52e-01, forces_mae: 1.67e-01, forces_cosine_similarity: 8.87e-02, forces_magnitude_error: 1.98e-01, loss: 2.35e+01, lr: 5.00e-04, epoch: 5.50e-01, step: 5.50e+01

2025-03-29 04:44:42 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 1.80e+00, forcesx_mae: 1.35e-01, forcesy_mae: 1.67e-01, forcesz_mae: 1.72e-01, forces_mae: 1.58e-01, forces_cosine_similarity: 1.41e-01, forces_magnitude_error: 2.35e-01, loss: 1.84e+01, lr: 5.00e-04, epoch: 6.00e-01, step: 6.00e+01

2025-03-29 04:44:47 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 2.54e+00, forcesx_mae: 1.12e-01, forcesy_mae: 1.63e-01, forcesz_mae: 1.58e-01, forces_mae: 1.44e-01, forces_cosine_similarity: 2.15e-01, forces_magnitude_error: 1.86e-01, loss: 1.87e+01, lr: 5.00e-04, epoch: 6.50e-01, step: 6.50e+01

2025-03-29 04:44:52 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 5.65e+00, forcesx_mae: 8.02e-02, forcesy_mae: 7.68e-02, forcesz_mae: 1.02e-01, forces_mae: 8.65e-02, forces_cosine_similarity: 2.34e-01, forces_magnitude_error: 1.13e-01, loss: 1.31e+01, lr: 5.00e-04, epoch: 7.00e-01, step: 7.00e+01

2025-03-29 04:44:57 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.50e+00, forcesx_mae: 1.66e-01, forcesy_mae: 1.86e-01, forcesz_mae: 2.47e-01, forces_mae: 2.00e-01, forces_cosine_similarity: 1.88e-01, forces_magnitude_error: 3.13e-01, loss: 2.87e+01, lr: 5.00e-04, epoch: 7.50e-01, step: 7.50e+01

2025-03-29 04:45:02 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 3.48e+00, forcesx_mae: 9.29e-02, forcesy_mae: 1.61e-01, forcesz_mae: 1.26e-01, forces_mae: 1.27e-01, forces_cosine_similarity: 1.74e-01, forces_magnitude_error: 1.60e-01, loss: 1.78e+01, lr: 5.00e-04, epoch: 8.00e-01, step: 8.00e+01

2025-03-29 04:45:07 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 4.68e+00, forcesx_mae: 1.63e-01, forcesy_mae: 2.52e-01, forcesz_mae: 2.53e-01, forces_mae: 2.23e-01, forces_cosine_similarity: 1.71e-01, forces_magnitude_error: 3.72e-01, loss: 3.41e+01, lr: 5.00e-04, epoch: 8.50e-01, step: 8.50e+01

2025-03-29 04:45:12 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 1.49e+00, forcesx_mae: 4.96e-02, forcesy_mae: 7.33e-02, forcesz_mae: 5.31e-02, forces_mae: 5.86e-02, forces_cosine_similarity: 1.68e-01, forces_magnitude_error: 7.36e-02, loss: 7.56e+00, lr: 5.00e-04, epoch: 9.00e-01, step: 9.00e+01

2025-03-29 04:45:18 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 2.72e+00, forcesx_mae: 2.11e-01, forcesy_mae: 2.87e-01, forcesz_mae: 3.33e-01, forces_mae: 2.77e-01, forces_cosine_similarity: 2.29e-01, forces_magnitude_error: 5.15e-01, loss: 3.04e+01, lr: 5.00e-04, epoch: 9.50e-01, step: 9.50e+01

2025-03-29 04:45:22 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/ocp_trainer.py:194: (INFO): energy_forces_within_threshold: 0.00e+00, energy_mae: 7.20e-01, forcesx_mae: 3.24e-02, forcesy_mae: 3.54e-02, forcesz_mae: 5.30e-02, forces_mae: 4.03e-02, forces_cosine_similarity: 2.61e-01, forces_magnitude_error: 5.69e-02, loss: 5.80e+00, lr: 5.00e-04, epoch: 1.00e+00, step: 1.00e+02

2025-03-29 04:45:23 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:863: (INFO): Evaluating on val.

device 0: 0%| | 0/20 [00:00<?, ?it/s]

device 0: 5%|▌ | 1/20 [00:00<00:07, 2.52it/s]

device 0: 10%|█ | 2/20 [00:00<00:05, 3.33it/s]

device 0: 15%|█▌ | 3/20 [00:00<00:04, 3.82it/s]

device 0: 20%|██ | 4/20 [00:01<00:03, 4.22it/s]

device 0: 25%|██▌ | 5/20 [00:01<00:03, 4.48it/s]

device 0: 30%|███ | 6/20 [00:01<00:03, 4.61it/s]

device 0: 35%|███▌ | 7/20 [00:01<00:02, 4.66it/s]

device 0: 40%|████ | 8/20 [00:01<00:02, 4.77it/s]

device 0: 45%|████▌ | 9/20 [00:02<00:02, 4.86it/s]

device 0: 50%|█████ | 10/20 [00:02<00:02, 4.92it/s]

device 0: 55%|█████▌ | 11/20 [00:02<00:01, 4.96it/s]

device 0: 60%|██████ | 12/20 [00:02<00:01, 4.92it/s]

device 0: 65%|██████▌ | 13/20 [00:02<00:01, 4.99it/s]

device 0: 70%|███████ | 14/20 [00:03<00:01, 5.04it/s]

device 0: 75%|███████▌ | 15/20 [00:03<00:00, 5.06it/s]

device 0: 80%|████████ | 16/20 [00:03<00:00, 5.10it/s]

device 0: 85%|████████▌ | 17/20 [00:03<00:00, 4.99it/s]

device 0: 90%|█████████ | 18/20 [00:03<00:00, 5.07it/s]

device 0: 95%|█████████▌| 19/20 [00:04<00:00, 5.11it/s]

device 0: 100%|██████████| 20/20 [00:04<00:00, 5.13it/s]

device 0: 100%|██████████| 20/20 [00:04<00:00, 4.71it/s]

2025-03-29 04:45:27 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:905: (INFO): energy_forces_within_threshold: 0.0000, energy_mae: 9.1440, forcesx_mae: 0.3023, forcesy_mae: 0.2598, forcesz_mae: 0.4722, forces_mae: 0.3448, forces_cosine_similarity: 0.0188, forces_magnitude_error: 0.4904, loss: 53.1097, epoch: 1.0000

Validate the model#

Load the best checkpoint#

The checkpoints directory contains two checkpoint files:

best_checkpoint.pt- Model parameters corresponding to the best val performance during training. Used for predictions.checkpoint.pt- Model parameters and optimizer settings for the latest checkpoint. Used to continue training.

# The `best_checpoint.pt` file contains the checkpoint with the best val performance

checkpoint_path = os.path.join(trainer.config["cmd"]["checkpoint_dir"], "best_checkpoint.pt")

checkpoint_path

'./checkpoints/2025-03-29-04-43-44-S2EF-example/best_checkpoint.pt'

# Append the dataset with the test set. We use the same val set for demonstration.

# Dataset

dataset.append(

{'src': val_src}, # test set (optional)

)

dataset

[{'src': 'data/s2ef/train_100',

'normalize_labels': True,

'target_mean': 0.45158625849998374,

'target_std': 1.5156444102461508,

'grad_target_mean': 0.0,

'grad_target_std': 1.5156444102461508},

{'src': 'data/s2ef/val_20'},

{'src': 'data/s2ef/val_20'}]

pretrained_trainer = OCPTrainer(

task=task,

model=copy.deepcopy(model), # copied for later use, not necessary in practice.

dataset=dataset,

optimizer=optimizer,

outputs={},

loss_functions={},

evaluation_metrics={},

name="s2ef",

identifier="S2EF-val-example",

run_dir="./", # directory to save results if is_debug=False. Prediction files are saved here so be careful not to override!

is_debug=False, # if True, do not save checkpoint, logs, or results

print_every=5,

seed=0, # random seed to use

logger="tensorboard", # logger of choice (tensorboard and wandb supported)

local_rank=0,

amp=True, # use PyTorch Automatic Mixed Precision (faster training and less memory usage),

)

pretrained_trainer.load_checkpoint(checkpoint_path=checkpoint_path)

2025-03-29 04:45:27 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/utils.py:1319: (WARNING): Detected old config, converting to new format. Consider updating to avoid potential incompatibilities.

2025-03-29 04:45:27 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:208: (INFO): amp: true

cmd:

checkpoint_dir: ./checkpoints/2025-03-29-04-45-52-S2EF-val-example

commit: core:b88b66e,experimental:NA

identifier: S2EF-val-example

logs_dir: ./logs/tensorboard/2025-03-29-04-45-52-S2EF-val-example

print_every: 5

results_dir: ./results/2025-03-29-04-45-52-S2EF-val-example

seed: 0

timestamp_id: 2025-03-29-04-45-52-S2EF-val-example

version: 0.1.dev1+gb88b66e

dataset:

format: lmdb

grad_target_mean: 0.0

grad_target_std: !!python/object/apply:numpy.core.multiarray.scalar

- &id001 !!python/object/apply:numpy.dtype

args:

- f8

- false

- true

state: !!python/tuple

- 3

- <

- null

- null

- null

- -1

- -1

- 0

- !!binary |

dPVlWhRA+D8=

key_mapping:

force: forces

y: energy

normalize_labels: true

src: data/s2ef/train_100

target_mean: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

zSXlDMrm3D8=

target_std: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

transforms:

normalizer:

energy:

mean: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

zSXlDMrm3D8=

stdev: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

forces:

mean: 0.0

stdev: !!python/object/apply:numpy.core.multiarray.scalar

- *id001

- !!binary |

dPVlWhRA+D8=

evaluation_metrics:

metrics:

energy:

- mae

forces:

- forcesx_mae

- forcesy_mae

- forcesz_mae

- mae

- cosine_similarity

- magnitude_error

misc:

- energy_forces_within_threshold

gp_gpus: null

gpus: 0

logger: tensorboard

loss_functions:

- energy:

coefficient: 1

fn: mae

- forces:

coefficient: 100

fn: l2mae

model:

activation: silu

atom_edge_interaction: true

atom_interaction: true

cbf:

name: spherical_harmonics

cutoff: 12.0

cutoff_aeaint: 12.0

cutoff_aint: 12.0

cutoff_qint: 12.0

direct_forces: true

edge_atom_interaction: true

emb_size_aint_in: 64

emb_size_aint_out: 64

emb_size_atom: 64

emb_size_cbf: 16

emb_size_edge: 64

emb_size_quad_in: 32

emb_size_quad_out: 32

emb_size_rbf: 16

emb_size_sbf: 32

emb_size_trip_in: 64

emb_size_trip_out: 64

envelope:

exponent: 5

name: polynomial

extensive: true

forces_coupled: false

max_neighbors: 30

max_neighbors_aeaint: 20

max_neighbors_aint: 1000

max_neighbors_qint: 8

name: gemnet_oc

num_after_skip: 2

num_atom: 3

num_atom_emb_layers: 2

num_before_skip: 2

num_blocks: 4

num_concat: 1

num_global_out_layers: 2

num_output_afteratom: 3

num_radial: 128

num_spherical: 7

output_init: HeOrthogonal

qint_tags:

- 1

- 2

quad_interaction: true

rbf:

name: gaussian

regress_forces: true

sbf:

name: legendre_outer

scale_file: ./gemnet-oc.pt

optim:

batch_size: 1

clip_grad_norm: 10

ema_decay: 0.999

eval_batch_size: 1

factor: 0.8

force_coefficient: 100

loss_energy: mae

loss_force: l2mae

lr_initial: 0.0005

max_epochs: 1

mode: min

num_workers: 2

optimizer: AdamW

optimizer_params:

amsgrad: true

patience: 3

scheduler: ReduceLROnPlateau

outputs:

energy:

level: system

forces:

eval_on_free_atoms: true

level: atom

train_on_free_atoms: true

relax_dataset: {}

slurm: {}

task:

dataset: lmdb

description: Regressing to energies and forces for DFT trajectories from OCP

eval_on_free_atoms: true

grad_input: atomic forces

labels:

- potential energy

metric: mae

train_on_free_atoms: true

type: regression

test_dataset:

src: data/s2ef/val_20

trainer: s2ef

val_dataset:

src: data/s2ef/val_20

2025-03-29 04:45:27 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:557: (INFO): Loading model: gemnet_oc

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:568: (INFO): Loaded GemNetOC with 2596214 parameters.

/home/runner/work/fairchem/fairchem/src/fairchem/core/modules/scaling/compat.py:37: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

scale_dict = torch.load(path)

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/logger.py:175: (WARNING): log_summary for Tensorboard not supported

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/trainers/base_trainer.py:358: (INFO): Loading dataset: lmdb

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/datasets/base_dataset.py:94: (WARNING): Could not find dataset metadata.npz files in '[PosixPath('data/s2ef/train_100')]'

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:166: (WARNING): Disabled BalancedBatchSampler because num_replicas=1.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:179: (WARNING): Failed to get data sizes, falling back to uniform partitioning. BalancedBatchSampler requires a dataset that has a metadata attributed with number of atoms.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:88: (INFO): rank: 0: Sampler created...

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:200: (INFO): Created BalancedBatchSampler with sampler=<fairchem.core.common.data_parallel.StatefulDistributedSampler object at 0x7f245d8a7a40>, batch_size=1, drop_last=False

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/datasets/base_dataset.py:94: (WARNING): Could not find dataset metadata.npz files in '[PosixPath('data/s2ef/val_20')]'

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:166: (WARNING): Disabled BalancedBatchSampler because num_replicas=1.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:179: (WARNING): Failed to get data sizes, falling back to uniform partitioning. BalancedBatchSampler requires a dataset that has a metadata attributed with number of atoms.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:88: (INFO): rank: 0: Sampler created...

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:200: (INFO): Created BalancedBatchSampler with sampler=<fairchem.core.common.data_parallel.StatefulDistributedSampler object at 0x7f2423b65ca0>, batch_size=1, drop_last=False

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/datasets/base_dataset.py:94: (WARNING): Could not find dataset metadata.npz files in '[PosixPath('data/s2ef/val_20')]'

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:166: (WARNING): Disabled BalancedBatchSampler because num_replicas=1.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:179: (WARNING): Failed to get data sizes, falling back to uniform partitioning. BalancedBatchSampler requires a dataset that has a metadata attributed with number of atoms.

2025-03-29 04:45:28 /home/runner/work/fairchem/fairchem/src/fairchem/core/common/data_parallel.py:88: (INFO): rank: 0: Sampler created...